Caffe is a deep learning framework popular on Linux, with Python and Matlab interfaces. I just managed to compile Caffe on Windows, and I think it’s worth sharing. niuzhiheng’s GitHub was of great help. This repository is organized so that merging future updates from Caffe’s GitHub should be very straightforward. For quick setup and usage, go to my GitHub.

For a quick-and-dirty start, go to Caffe + vs2013 + OpenCV in Windows Tutorial (I) – Setup, where I provide 1) the modified files that can be compiled on Windows right away and 2) the vs2013 project that I’m currently using.

Below is my step-by-step record of compiling Caffe from source on Windows 8.1 + vs2013 + OpenCV 2.4.9 + CUDA 6.5.

- Download the source from Caffe’s GitHub and unzip it.

- Create a new project in Visual Studio 2013.

- File -> New -> Project

- Choose Win32 Console Application

- Set location to the root of Caffe

- Change Name to caffe (the generated exe file is named after the project, so please use this lowercase name)

- Click OK

- Check Empty project, and then click Finish

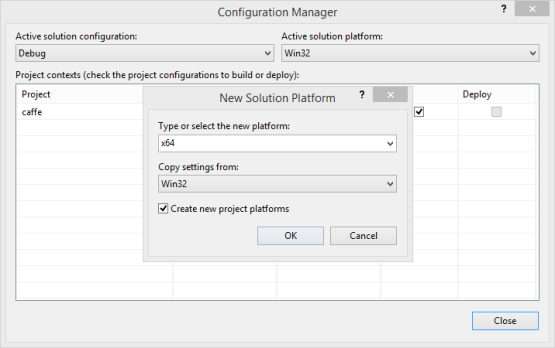

- Change the platform from Win32 to x64:

- Build -> Configuration Manager -> Active solution platform -> new -> x64 -> OK

- An empty project called caffe is now generated in the root of Caffe. When compiling a big project like Caffe, good practice is to compile a few *.cpp files first and resolve the dependencies one by one.

- Drag the files in caffe/src/caffe to Source Files in VS.

- Let’s set some directories of the project.

- In Property Manager, both Debug|x64 and Release|x64 need to be set:

- In Configuration Properties -> General, set Output Directory to ‘../bin’, so the generated exe files will be easy to use later. Set this for both Debug and Release mode.

- In Configuration Properties -> C/C++ -> General, edit Additional Include Directories (Both Debug and Release) to include:

../include; ../src;

- Make sure to check Inherit from parent or project defaults.

- Now let’s fix the dependencies one by one. (My pre-built 3rdparty folder can be downloaded as 3rdparty.zip if you are using Windows 64-bit + vs2013.)

- CUDA 6.5

- Download and install (You have to have a GPU on your PC lol)

- OpenCV 2.4.9 + CUDA 6.5

- Boost

- The pre-built binaries work fine. I used “boost_1_56_0-msvc-12.0-64.exe”.

- OpenBLAS

- The pre-built binaries work fine. I used “OpenBLAS-v0.2.12-Win64-int64.zip”.

- Update: the 4 required dll files (libgcc_s_seh-1.dll, libgcc_s_sjlj-1.dll, libgfortran-3.dll, libquadmath-0.dll) can be found here: OpenBlas_Required_DLL.zip (x64)

- Add OpenCV + CUDA + Boost into the project:

- I usually put these 3 libraries outside the Caffe folder because they are useful in many other projects.

- Add include path to Additional Include Directories: (Both Debug and Release)

$(CUDA_PATH_V6_5)\include; $(OPENCV_X64_VS2013_2_4_9)\include; $(OPENCV_X64_VS2013_2_4_9)\include\opencv; $(BOOST_1_56_0)

- CUDA_PATH_V6_5 is added by the CUDA installer. OPENCV_X64_VS2013_2_4_9 and BOOST_1_56_0 need to be added to the Environment Variables. Also, the bin folder of OpenCV (e.g. /to/opencv-2.4.9/x64-vs2013/bin) needs to be added to Path. You may need to log off for these changes to take effect.

- Add lib path to Additional Library Directories in Configuration Properties -> Linker -> General: (Both Debug and Release)

$(CUDA_PATH_V6_5)\lib\$(PlatformName); $(OPENCV_X64_VS2013_2_4_9)\lib; $(BOOST_1_56_0)\lib64-msvc-12.0

- Add libraries to Additional Dependencies in Configuration Properties -> Linker -> Input

- Debug:

opencv_core249d.lib;opencv_calib3d249d.lib;opencv_contrib249d.lib;opencv_flann249d.lib;opencv_highgui249d.lib;opencv_imgproc249d.lib;opencv_legacy249d.lib;opencv_ml249d.lib;opencv_gpu249d.lib;opencv_objdetect249d.lib;opencv_photo249d.lib;opencv_features2d249d.lib;opencv_nonfree249d.lib;opencv_stitching249d.lib;opencv_video249d.lib;opencv_videostab249d.lib;cudart.lib;cuda.lib;nppi.lib;cufft.lib;cublas.lib;curand.lib;%(AdditionalDependencies)

- Release:

opencv_core249.lib;opencv_flann249.lib;opencv_imgproc249.lib;opencv_highgui249.lib;opencv_legacy249.lib;opencv_video249.lib;opencv_ml249.lib;opencv_calib3d249.lib;opencv_objdetect249.lib;opencv_stitching249.lib;opencv_gpu249.lib;opencv_nonfree249.lib;opencv_features2d249.lib;cudart.lib;cuda.lib;nppi.lib;cufft.lib;cublas.lib;curand.lib;%(AdditionalDependencies)
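Because the include and library paths above depend on environment variables, a quick way to confirm that a newly started process actually sees them (for example after logging off and on again) is a tiny check like the following. It is a sketch: `env_is_set`, `env_or` and `count_missing_vars` are hypothetical helper names, and the variable list simply mirrors the names used in this tutorial.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>

// Hypothetical helper: true if the environment variable exists and is non-empty.
inline bool env_is_set(const char* name) {
    const char* value = std::getenv(name);
    return value != nullptr && value[0] != '\0';
}

// Hypothetical helper: the variable's value, or `fallback` if it is unset or empty.
inline std::string env_or(const char* name, const std::string& fallback) {
    return env_is_set(name) ? std::string(std::getenv(name)) : fallback;
}

// Variables referenced by the include/library paths in this tutorial.
const char* const kRequiredVars[] = {
    "CUDA_PATH_V6_5", "OPENCV_X64_VS2013_2_4_9", "BOOST_1_56_0"};

// Returns how many of the required variables are missing from this process.
inline int count_missing_vars() {
    int missing = 0;
    for (const char* name : kRequiredVars) {
        if (!env_is_set(name)) ++missing;
    }
    return missing;
}
```

Printing `env_or("OPENCV_X64_VS2013_2_4_9", "(not set)")` from a freshly opened console is enough to tell whether a log-off is still needed.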

- GFlags + GLog + ProtoBuf + LevelDB

- Download source code from the internet.

- Use CMake to generate a .sln for vs2013. Remember to set “CMAKE_INSTALL_PREFIX”, which is where building the “INSTALL” target puts the output files.

- Build in vs2013. Usually build “ALL_BUILD” first, then “INSTALL”, in both Debug and Release mode.

- Copy the compiled files to caffe/3rdparty. Debug versions should be renamed with a “d” suffix before copying, e.g. “gflags.lib” -> “gflagsd.lib”.

- Google’s code is very well maintained. Nothing much to say.

- HDF5

- Download the source code from the internet.

- In CMake, enable HDF5_BUILD_HL_LIB.

- Copy compiled files to caffe/3rdparty.

- LMDB

- Download source code from the internet.

- In Visual Studio 2013, File -> New -> Project From Existing Code…

- The LMDB C source needs a header file called unistd.h, which Visual Studio does not provide. A workaround is described here. Alternatively, you can download unistd.h, getopt.h and getopt.c here.

- The compiled LMDB has a problem, which I’ll mention later.

- Copy some .dll files so that caffe can run

- Copy libglog.dll from GLog to caffe/bin.

- Copy libopenblas.dll from OpenBLAS to caffe/bin.

- Copy msvcp120.dll and msvcr120.dll from HDF5 to caffe/bin.

- More paths added to Additional Include Directories (Both Debug and Release):

../3rdparty/include; ../3rdparty/include/openblas; ../3rdparty/include/hdf5; ../3rdparty/include/lmdb;

- More paths added to Additional Library Directories (Both Debug and Release):

../3rdparty/lib

- More files added to Additional Dependencies:

- Debug:

gflagsd.lib;libglog.lib;libopenblas.dll.a;libprotobufd.lib;libprotoc.lib;leveldbd.lib;lmdbd.lib;libhdf5_D.lib;libhdf5_hl_D.lib;Shlwapi.lib;

- Release:

gflags.lib;libglog.lib;libopenblas.dll.a;libprotobuf.lib;libprotoc.lib;leveldb.lib;lmdb.lib;libhdf5.lib;libhdf5_hl.lib;Shlwapi.lib;
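As an alternative to keeping the Debug and Release Additional Dependencies lists in sync by hand, MSVC also accepts `#pragma comment(lib, ...)` in source code (the same mechanism Boost uses to request its own libraries), and `_DEBUG`, which MSVC defines for the debug runtime options /MDd and /MTd, can select the “d”-suffixed Debug libraries. This is a sketch of that idea, not part of the setup above; `config_lib_name` is a hypothetical helper, and only a few of the libraries are shown.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: library file name for the current configuration,
// using the "d" suffix convention for Debug builds described above.
// MSVC defines _DEBUG when compiling with /MDd or /MTd.
inline std::string config_lib_name(const std::string& base) {
#ifdef _DEBUG
    return base + "d.lib";
#else
    return base + ".lib";
#endif
}

// MSVC-only: ask the linker to add these libraries, so they need not be
// listed in Additional Dependencies. Other compilers skip this block.
#ifdef _MSC_VER
#ifdef _DEBUG
#pragma comment(lib, "gflagsd.lib")
#pragma comment(lib, "leveldbd.lib")
#else
#pragma comment(lib, "gflags.lib")
#pragma comment(lib, "leveldb.lib")
#endif
#pragma comment(lib, "Shlwapi.lib")
#endif
```

The Additional Library Directories setting is still needed so the linker can find the named files.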

- My pre-built 3rdparty folder can be downloaded as 3rdparty.zip if you are using Windows 64-bit + vs2013.

- Now compile “common.cpp” and fix the errors.

- Add “#include <process.h>” to common.cpp to fix the “getpid” error.

- Add “_CRT_SECURE_NO_WARNINGS” to Configuration Properties -> C/C++ -> Preprocessor -> Preprocessor Definitions to fix the “fopen_s” error.

- Change the line that uses “getpid” in common.cpp to fix the POSIX error:

// port for Win32
#ifndef _MSC_VER
pid = getpid();
#else
pid = _getpid();
#endif

- common.cpp should be compiled without error now.
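If more files end up needing the process id, the same port can live in one small helper instead of an `#ifdef` at every call site. A minimal sketch; `current_pid` is a hypothetical name, not a Caffe function.

```cpp
#include <cassert>
#ifdef _MSC_VER
#include <process.h>   // _getpid
#else
#include <unistd.h>    // getpid
#endif

// Hypothetical helper: the current process id on both MSVC and POSIX.
inline int current_pid() {
#ifdef _MSC_VER
    return _getpid();
#else
    return static_cast<int>(getpid());
#endif
}
```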

- Now compile “blob.cpp”

- To make #include “caffe/proto/caffe.pb.h” work, we need to generate that header from caffe.proto.

- Put protoc.exe (the Protocol Buffers compiler) in the caffe/3rdparty/bin folder.

- Put GeneratePB.bat in caffe/scripts folder.

- Add the line below (with the quote mark) to Configuration Properties -> Build Events -> Pre-Build Event -> Command Line:

“../scripts/GeneratePB.bat”

- Right-click caffe to build the project; you will see “caffe.pb.h is being generated” and “caffe_pretty_print.pb.h is being generated”.

- Now blob.cpp can be compiled without errors.

- Now compile “net.cpp”

- The missing “unistd.h” error can be fixed as above (unistd.h is also included in 3rdparty.zip).

- The missing “mkstemp” error can be fixed as described here (download mkstemp.h and mkstemp.cpp here, or find them in 3rdparty.zip).

- Add “#include “mkstemp.h”” to io.hpp.

- Change the line that uses “close” in io.hpp to the code below to fix the close error. (Don’t put “#define close _close” in io.hpp. Such defines are dangerous in any .hpp file, but acceptable in .cpp files.)

#ifndef _MSC_VER
close(fd);
#else
_close(fd);
#endif

- Change the line that uses the “mkdtemp” function in io.hpp to the code below (using _mktemp_s as a workaround for mkdtemp):

#ifndef _MSC_VER
char* mkdtemp_result = mkdtemp(temp_dirname_cstr);
#else
errno_t mkdtemp_result = _mktemp_s(temp_dirname_cstr, sizeof(temp_dirname_cstr));
#endif
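One caveat with the `_mktemp_s` workaround: unlike `mkdtemp`, `_mktemp_s` only rewrites the trailing `XXXXXX` into a unique name and returns 0 on success; it does not create the directory. So if the surrounding code still checks the result against NULL the way it would for `mkdtemp`, success will look like failure, and anything that later writes into the directory will find it missing. A sketch that creates the directory on both platforms, with `make_temp_dir` as a hypothetical helper name:

```cpp
#include <cassert>
#include <cstdlib>     // _mktemp_s (MSVC), mkdtemp (POSIX)
#include <string>
#include <vector>
#ifdef _MSC_VER
#include <direct.h>    // _mkdir
#else
#include <unistd.h>    // mkdtemp is declared here on some platforms
#endif

// Hypothetical helper: creates a new directory from a template that ends in
// "XXXXXX" and returns its path, or an empty string on failure.
inline std::string make_temp_dir(const std::string& templ) {
    std::vector<char> buf(templ.begin(), templ.end());
    buf.push_back('\0');  // the C APIs below need a writable, NUL-terminated buffer
#ifdef _MSC_VER
    // _mktemp_s returns 0 on success and only fills in a unique name;
    // the directory itself still has to be created.
    if (_mktemp_s(buf.data(), buf.size()) != 0) return std::string();
    if (_mkdir(buf.data()) != 0) return std::string();
    return std::string(buf.data());
#else
    // mkdtemp picks the name and creates the directory; NULL means failure.
    char* result = mkdtemp(buf.data());
    return result != nullptr ? std::string(result) : std::string();
#endif
}
```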

- Now compile “solver.cpp”

- Add these lines to solver.cpp to fix the “snprintf” error:

// port for Win32
#ifdef _MSC_VER
#define snprintf sprintf_s
#endif
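A caveat on `#define snprintf sprintf_s`: the two behave differently when the output does not fit. `snprintf` truncates, while `sprintf_s` empties the buffer and calls the invalid-parameter handler, which by default terminates the program. VS2013 has no C99 `snprintf` (it arrived with VS2015, `_MSC_VER` 1900), so a truncating alternative there is `_vsnprintf_s` with `_TRUNCATE`. A sketch under those assumptions; `portable_snprintf` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdarg>
#include <cstdio>
#include <cstring>

// Hypothetical helper: snprintf-style formatting that truncates instead of
// aborting, and always NUL-terminates (for size > 0).
inline int portable_snprintf(char* buf, std::size_t size, const char* fmt, ...) {
    va_list args;
    va_start(args, fmt);
#if defined(_MSC_VER) && _MSC_VER < 1900
    // VS2013 and earlier: _TRUNCATE copies what fits; returns -1 if truncated.
    int n = _vsnprintf_s(buf, size, _TRUNCATE, fmt, args);
#else
    // C99/C++11: truncates and returns the length the full output would need.
    int n = std::vsnprintf(buf, size, fmt, args);
#endif
    va_end(args);
    return n;
}
```

Note that the two branches return different values on truncation (-1 versus the full length), so callers should not use the return value for buffer sizing.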

- Now compile files in caffe/src/layers

- Create a folder in Source Files and drag ONE .cu file from the layers folder into it.

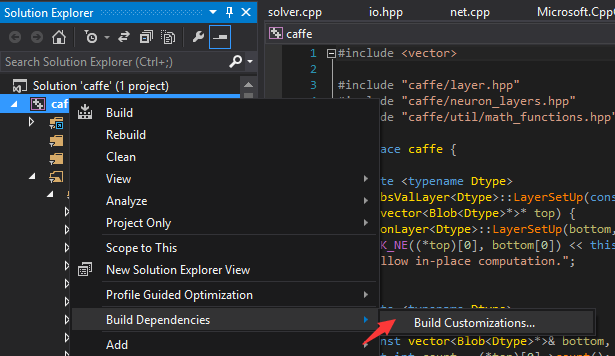

- Enable CUDA 6.5(.targets, .props) in PROJECT -> Build Customization.

- In the property of any .cu file, change Item Type to CUDA C/C++

- Drag the rest of the files in the layers folder into the project. All newly added .cu files will then get the CUDA C/C++ Item Type automatically; otherwise you would have to change them one by one.

- In bnll_layer.cu, change “const float kBNLL_THRESHOLD = 50.;” to “#define kBNLL_THRESHOLD 50.0” to avoid error.

- Now every file in the layers folder compiles fine.

- Now compile files in caffe/src/util folder

- Go to the ReadProtoFromBinaryFile function in io.cpp. Change “O_RDONLY” to “O_RDONLY | O_BINARY”. When loading a binary file on Windows, you have to specify that it’s binary. Otherwise you might get an error loading a *_mean.binaryproto file later.

- Add these lines to io.cpp to avoid the POSIX error for “open”:

// port for Win32
#ifdef _MSC_VER
#define open _open
#endif

- Change “close()” to “_close()” manually, except for “file.close()”. (Don’t add “#define close _close” here: the macro would also turn “file.close()” into “file._close()”.)
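The `O_BINARY` change matters because a descriptor opened in the default text mode on Windows translates `\r\n` to `\n` and stops reading at a Ctrl-Z (0x1A) byte, which corrupts binary protobuf data. POSIX systems make no such distinction and may not define `O_BINARY` at all. A self-contained sketch of the same flag handling; `read_binary_file` and `write_binary_file` are hypothetical helpers, not Caffe’s `ReadProtoFromBinaryFile`.

```cpp
#include <cassert>
#include <cstddef>
#include <fcntl.h>
#include <string>
#include <sys/types.h>
#include <sys/stat.h>
#ifdef _MSC_VER
#include <io.h>        // _open, _read, _write, _close
#else
#include <unistd.h>    // read, write, close
#endif

// POSIX systems have no text/binary distinction and may not define O_BINARY.
#ifndef O_BINARY
#define O_BINARY 0
#endif

// Hypothetical helper: writes `data` to `path` in binary mode.
// A single write call is enough for the small buffers in this sketch.
inline bool write_binary_file(const char* path, const std::string& data) {
#ifdef _MSC_VER
    int fd = _open(path, O_WRONLY | O_CREAT | O_TRUNC | O_BINARY, _S_IREAD | _S_IWRITE);
    if (fd < 0) return false;
    long n = _write(fd, data.data(), static_cast<unsigned>(data.size()));
    _close(fd);
#else
    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC | O_BINARY, 0644);
    if (fd < 0) return false;
    long n = static_cast<long>(write(fd, data.data(), data.size()));
    close(fd);
#endif
    return n == static_cast<long>(data.size());
}

// Hypothetical helper: reads the whole file at `path` in binary mode.
inline bool read_binary_file(const char* path, std::string* out) {
#ifdef _MSC_VER
    int fd = _open(path, O_RDONLY | O_BINARY);
#else
    int fd = open(path, O_RDONLY | O_BINARY);
#endif
    if (fd < 0) return false;
    out->clear();
    char chunk[4096];
    bool ok = true;
    for (;;) {
#ifdef _MSC_VER
        long n = _read(fd, chunk, static_cast<unsigned>(sizeof(chunk)));
#else
        long n = static_cast<long>(read(fd, chunk, sizeof(chunk)));
#endif
        if (n == 0) break;                  // end of file
        if (n < 0) { ok = false; break; }   // read error
        out->append(chunk, static_cast<std::size_t>(n));
    }
#ifdef _MSC_VER
    _close(fd);
#else
    close(fd);
#endif
    return ok;
}
```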

- Add these lines to math_functions.cpp to fix the __builtin_popcount and __builtin_popcountl errors:

#define __builtin_popcount __popcnt
#define __builtin_popcountl __popcnt
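Two notes on these macros. Mapping `__builtin_popcountl` to `__popcnt` is fine on 64-bit Windows because `long` is 32 bits there, so both builtins take 32-bit values. However, `__popcnt` (declared in `<intrin.h>`) compiles to the POPCNT instruction, and Microsoft documents the result as unpredictable on CPUs without it. If that is a concern, a plain bit-twiddling fallback works everywhere; a sketch, with `popcount32` and `portable_popcount` as hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: counts set bits with the classic SWAR bit trick,
// using only plain integer operations (no POPCNT instruction required).
inline int popcount32(std::uint32_t x) {
    x = x - ((x >> 1) & 0x55555555u);                  // 2-bit partial sums
    x = (x & 0x33333333u) + ((x >> 2) & 0x33333333u);  // 4-bit partial sums
    x = (x + (x >> 4)) & 0x0F0F0F0Fu;                  // 8-bit partial sums
    return static_cast<int>((x * 0x01010101u) >> 24);  // add the four bytes
}

// Hypothetical helper: uses the GCC/Clang builtin where available and the
// portable fallback elsewhere (e.g. MSVC on CPUs that may lack POPCNT).
inline int portable_popcount(std::uint32_t x) {
#if defined(__GNUC__)
    return __builtin_popcount(x);
#else
    return popcount32(x);
#endif
}
```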

- Now compile files in caffe/src/proto

- Previously 2 .cc files were generated by GeneratePB.bat.

- Create a folder in Source Files and drag 2 .cc files (“caffe.pb.cc” and “caffe_pretty_print.pb.cc”) into it.

- Add “-D_SCL_SECURE_NO_WARNINGS” to Configuration Properties -> C/C++ -> Command Line (Both Debug and Release mode) to fix ‘std::_Copy_impl’ unsafe error.

- Now every file is compilable in Visual Studio.

- Finally compile caffe.cpp in caffe/tools folder

- Drag caffe/tools/caffe.cpp file into Visual Studio’s Source Files

- Right-click the project name caffe, and BUILD!

- Now everything should work! If you get a linking error, check the name of the missing function to figure out which library is missing.

- Change to Release mode and BUILD again to get a caffe/bin/caffe.exe of Release mode.

- Copy caffe.exe to make a backup, e.g. caffe-backup.exe, because caffe.exe will be overwritten if you build the project again.

- Let’s do a QUICK TEST on MNIST!

- Get MNIST Dataset

- Put 7za.exe and wget.exe into caffe/3rdparty/bin.

- Put get_mnist.bat in caffe/data/mnist and run.

- You will get 4 files ending with “-ubyte”.

- Generate convert_mnist_data.exe

- Make sure the project in Visual Studio is set to Release|x64.

- Exclude “caffe.cpp” from the project.

- Drag “caffe/examples/mnist/convert_mnist_data.cpp” to the project.

- Add these lines to convert_mnist_data.cpp:

// port for Win32
#ifdef _MSC_VER
#include <direct.h>
#define snprintf sprintf_s
#endif

- Change “mkdir” to “_mkdir” in convert_mnist_data.cpp:

// port for Win32
#ifndef _MSC_VER
CHECK_EQ(mkdir(db_path, 0744), 0) << "mkdir " << db_path << " failed";
#else
CHECK_EQ(_mkdir(db_path), 0) << "mkdir " << db_path << " failed";
#endif

- The “potentially uninitialized local pointer variable” error can be fixed by initializing db, mdb_env and mdb_txn with NULL in convert_mnist_data.cpp. Change “MDB_env *mdb_env;” to “MDB_env *mdb_env = NULL;”, and similarly for “mdb_txn” and “db”.

- BUILD the project to get caffe.exe in caffe/bin. Rename it to convert_mnist_data.exe.
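The `mkdir` port above can also be wrapped once if other tools need it. Note that `_mkdir` takes no permission argument, so the `0744` mode only applies on POSIX, and both versions create a single directory level, so the parent must already exist. A sketch with a hypothetical `make_dir` helper that keeps the 0-on-success convention the `CHECK_EQ(..., 0)` relies on:

```cpp
#include <cassert>
#include <cerrno>
#include <string>
#ifdef _MSC_VER
#include <direct.h>      // _mkdir
#else
#include <sys/stat.h>    // mkdir
#include <sys/types.h>
#endif

// Hypothetical helper: creates a single directory level. Returns 0 on success
// and -1 on failure with errno set (e.g. EEXIST, ENOENT), on both platforms.
inline int make_dir(const std::string& path) {
#ifdef _MSC_VER
    return _mkdir(path.c_str());        // no permission argument on Windows
#else
    return mkdir(path.c_str(), 0744);   // same mode as the original code
#endif
}
```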

- Create LevelDB data for training

- Put create_mnist.bat in caffe/examples/mnist and run it. Here we generate mnist_train_leveldb and mnist_test_leveldb. If lmdb is used as the backend, an error occurs. Fortunately, leveldb works fine. It would be great if someone could fix the lmdb error.

- Let’s get caffe.exe back from caffe-backup.exe, if you made that backup earlier.

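- Now modify caffe/examples/mnist/lenet_train_test.prototxt: substitute “lmdb” with “leveldb” in the data source paths and “LMDB” with “LEVELDB” in the backend fields, because we generated leveldb data instead of lmdb. (The backend value is an enum, so it must be written in upper case.)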

- Do training

- Put train_lenet.bat in caffe/examples/mnist and run. Cool! The training is on! The accuracy is around 0.98 after hundreds of iterations.

Update – 2015.1.26

- The above steps didn’t include cuDNN acceleration.

- One can add “USE_CUDNN” to the project’s preprocessor definitions to enable cuDNN. (Currently Caffe works with cuDNN v1. The setup is pretty straightforward. Note that the “Code Generation” setting for CUDA should be at least “compute_30, sm_30”.)

- My tests showed that for the LeNet layout, testing 800 images of size 32*32 (excluding the time for reading the input images):

- CPU takes around 400~500 ms

- GPU takes around 50 ms

- cuDNN takes around 15 ms
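When reproducing timings like these, it helps to time only the forward pass, after the images are already in memory. A minimal sketch using `std::chrono`; `average_ms` is a hypothetical helper, and the callable stands in for whatever forward call you use. For GPU runs, kernel launches are asynchronous, so the callable may need to wait for the device to finish (e.g. with `cudaDeviceSynchronize()`) before returning, or the measured time will be too small.

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Hypothetical helper: average wall-clock time per call of `work`, in
// milliseconds. Only the calls themselves are timed, so loading images
// beforehand is excluded, as in the measurements above.
inline double average_ms(const std::function<void()>& work, int runs) {
    assert(runs > 0);
    work();  // one untimed warm-up call (first calls often pay one-time setup costs)
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < runs; ++i) work();
    const auto end = std::chrono::steady_clock::now();
    const std::chrono::duration<double, std::milli> elapsed = end - start;
    return elapsed.count() / runs;
}
```

The warm-up call is a design choice: without it, one-time costs such as memory allocation on the first pass would be averaged into the result.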

I have some problems when building your code in x64 Debug mode. Can you help me solve these two problems?

Thanks!

1>common.obj : error LNK2019: unresolved external symbol “__declspec(dllimport) void __cdecl google::InstallFailureSignalHandler(void)” (__imp_?InstallFailureSignalHandler@google@@YAXXZ) referenced in function “void __cdecl caffe::GlobalInit(int *,char * * *)” (?GlobalInit@caffe@@YAXPEAHPEAPEAPEAD@Z)

1>data_layer.obj : error LNK2019: unresolved external symbol “class boost::shared_ptr<class caffe::Dataset<class std::basic_string<char,struct std::char_traits,class std::allocator >,class caffe::Datum,struct caffe::dataset_internal::DefaultCoder<class std::basic_string<char,struct std::char_traits,class std::allocator > >,struct caffe::dataset_internal::DefaultCoder > > __cdecl caffe::DatasetFactory<class std::basic_string<char,struct std::char_traits,class std::allocator >,class caffe::Datum>(enum caffe::DataParameter_DB const &)”

1) `InstallFailureSignalHandler` can be commented out in `common.cpp`.

2) The linking problem is probably caused by not having boost library or not compiling all files in `caffe/src/caffe` folder.

It seems that you are using 9c39b93, which was my attempt to merge from caffe::dev, but some static linking problems happened afterwards. Please try later commits, e.g. 2a9acc2.

The code has worked. Thanks!

Hello, I just ran into the same problem as you. Can you describe in detail how you solved it? Thank you.

Hi, how did you solve this problem? I ran into the same problems. Thank you.

Can I build and test it on Windows 8 with a Core i7 x64 and no NVIDIA card?

Yes, I think so. Just don’t add any .cu files, and set the Caffe mode to CPU before you create a Net in your code.

Thanks for your reply. How can I set the Caffe mode to CPU?

An example is roughly like this:

// Set CPU mode
Caffe::set_mode(Caffe::CPU);
// Set to TEST phase
Caffe::set_phase(Caffe::TEST);
// Load net
Net<float> net("deploy.prototxt");
// Load pre-trained net (binary proto)
net.CopyTrainedLayersFrom("trained_model.caffemodel");

Thank you very much for this! I was able to build and run caffe with VS2013 following your detailed instructions! Everything else that I tried previously has failed.

Have you managed to build the Python and Matlab wrappers?

I want to use them too. If you build them, please tell me how. Thank you very much.

@Sepehr, for the Matlab wrapper, do the following steps:

1) In tools, drag the matlab/caffe/matcaffe.cpp file into Visual Studio’s Source Files.

2) In C/C++, add the Matlab include path to Additional Include Directories; in my case “C:\Program Files\MATLAB\R2011b\extern\include”.

3) Add the Matlab lib path to Additional Library Directories in Configuration Properties -> Linker -> General; in my case “C:\Program Files\MATLAB\R2011b\extern\lib\win64\microsoft”.

4) In Configuration Properties -> General, set Target Extension to “.mexw64” and Configuration Type to “Dynamic Library (.dll)”.

5) Under “Additional Dependencies”, add libmx.lib; libmex.lib; libmat.lib;

Hi Neil,

I have almost successfully installed caffe following your instructions; it only failed at step 14. When I was building caffe, I got “fatal error LNK1104: cannot open file ‘libboost_date_time-vc120-mt-gd-1_56.lib'”. But I used boost_1_57_0 throughout my project and everything else worked well. It’s strange that the project asks for a library from boost_1_56_0. Could you help me?

It’s not weird, because the request for the Boost libs comes from Boost’s header files (Boost selects its libraries automatically through auto-linking). In order to use Boost 1.57, you have to replace the Boost header files that you use in your project.

I have been using the header files of Boost 1.57 in my project, which means I am using the Boost 1.57 path in Additional Include Directories.

Could you explain this in detail? It is the header files of Boost 1.57 that I have been using in my project.

“boost/version.hpp” specifies the version of Boost, e.g. #define BOOST_LIB_VERSION "1_56"

I had the same problem and solved by replacing boost 1.57 with 1.56 in my project.

I think the pre-built libraries use the boost 1.56.

You may install boost 1.56 to solve it.

Thanks for your reply, I have solved the problem via the same way.

I had the same problem, but I used boost_1_58_0 in my project. The problem is caused by leveldb.lib: if you want to use a newer Boost version, this lib needs to be rebuilt with that version. I guess that Neil Shao’s leveldb.lib was built with boost_1_56_0.

I built and tested it on Windows 8, Core i7 x64, without CUDA.

Thanks for your help.

I have a new problem.

I tested the net in Debug and Release mode.

In Debug mode, I got valid test net output in the first iteration.

But in Release mode, I got an invalid test net loss output.

I0302 17:09:29.914144 9944 solver.cpp:320] Test net output #0: accuracy = 0.0128

I0302 17:09:29.926195 9944 solver.cpp:320] Test net output #1: loss = -1.#QNAN (* 1 = -1.#QNAN loss)

What’s the difference between Debug and Release mode?

I built lmdb as you said,

but there is one error:

>mdb.c(8767): error C2440: “function”: cannot convert from “DWORD (__cdecl *)(void *)” to “LPTHREAD_START_ROUTINE”

I used your 3rdparty.

I tested a simple leveldb C++ program:

#include "leveldb/db.h"
#include "leveldb/write_batch.h"

int main()
{
    leveldb::DB* db;
    leveldb::Options options;
    return 0;
}

but I get an error like this:

error LNK2019: unresolved external symbol public: __thiscall leveldb::Options::Options(void)” (??0Options@leveldb@@QAE@XZ) referenced in function _main

What’s wrong?

I am using Windows 8.1 + vs2013 + 64-bit.

Thank you very much!

Linking errors are usually caused by missing implementations of declared functions. Did you add leveldb’s lib to the linker input?

Yes, I have added leveldb’s lib to the linker input.

I found the same problem on Stack Overflow:

http://stackoverflow.com/questions/9244670/leveldb-example-not-working-on-windows-error-lnk2029

but the link in the answer is broken.

I have no idea what to do.

I’m not sure, but it looks like the missing part is “Options”. You may try adding your cpp file to the leveldb project and compiling them together.
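One more thing worth checking here: the unresolved symbol in the error is decorated as `__thiscall` (`...QAE@XZ`), a calling convention MSVC only uses for 32-bit code, while x64 symbols look like the `PEA...`-style names in the earlier linker log. That suggests, though it is only a guess, that the test program was built as Win32 while the prebuilt libraries are x64. A guard like the following sketch (`pointer_bits` is a hypothetical helper) turns that mismatch into a clear compile-time error.

```cpp
#include <cassert>

// Hypothetical helper: pointer width of the current build target, in bits.
inline int pointer_bits() { return static_cast<int>(sizeof(void*) * 8); }

// The prebuilt 3rdparty libraries are x64, so stop a Win32 build at compile
// time instead of letting it fail later with unresolved external symbols.
static_assert(sizeof(void*) == 8,
              "Set the solution platform to x64: the prebuilt libraries are 64-bit.");
```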

I’m getting the following when running the batch file:

C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\V120\Microsoft.CppCommon.targets(122,5): error MSB3073: The command ““../scripts/GeneratePB.bat”

C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\V120\Microsoft.CppCommon.targets(122,5): error MSB3073: :VCEnd” exited with code 9009.

It seems it can’t find this:

../src/caffe/proto/caffe_pretty_print.proto: No such file or directory

You can modify the path yourself of course.

What I mean is that in /src/caffe/proto/ there is only a caffe.proto file, and caffe_pretty_print.proto is missing. Is the latter necessary?

You may find it here https://github.com/initialneil/caffe/tree/windows/src/caffe/proto.

The latest version of Caffe has gotten rid of caffe_pretty_print.proto, so just delete the line that compiles caffe_pretty_print.proto in GeneratePB.bat.

Is there any way to solve?

Do you know where we can find the logs that are produced during training?

Found it in C:\Users\userName\AppData\Local\Temp on Windows 7.

Have any of you encountered memory-hog problems? As the iterations increase, RAM usage goes up as if there were a memory leak.

I am currently at iteration 6320, training BVLC’s GoogLeNet on a smaller dataset in leveldb format, and I am using 15 of the 16 GB of RAM. However, the Task Manager shows caffe.exe using only ~170 MB.

I am using batch sizes of 8 and 5 for train and test respectively, running in GPU mode on a GPU with 1 GB of memory.

I found the same problem.

There is a similar problem with LMDB datasets (thanks to Kazukuni Hosoi’s comment). I solved it by just adding the following lines at the top of the LMDBCursor::Seek method. They release memory-mapped pages that are no longer used after the current seek.

if (op != MDB_FIRST)
  VirtualUnlock(mdb_value_.mv_data, mdb_value_.mv_size);

I think the LevelDB case could be solved similarly.

hi,

I have completed all steps successfully, except that in the final step, running “train_lenet.bat”, I got the error “The program can’t start because opencv_core249.dll is missing from your computer.”, although opencv_core249.dll is included and everything builds successfully.

Only .h and .lib files are needed for compilation; the .dll files are needed at run time. You can either add OpenCV’s dll folder to your system path and then reboot, or just copy the needed dll files to the folder of the compiled exe.

Hi, Neil. I have a strange problem. I followed your steps exactly when building the OpenCV gpu module with VS2010 and CUDA 6.5. I successfully compiled 2.4.9 in Debug mode and 2.4.10 in Release mode, but 2.4.9 Release and 2.4.10 Debug failed. The errors look like this:

CMake Error at modules/highgui/cmake_install.cmake:42 (file):

48> file INSTALL cannot find

48> “D:/MyProgram/opencv-2.4.9/gpu_build/bin/Release/opencv_highgui249.dll”.

48> Call Stack (most recent call first):

48> modules/cmake_install.cmake:60 (include)

48> cmake_install.cmake:105 (include)

48>

48>

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: The command “setlocal

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: “C:\Program Files (x86)\CMake\bin\cmake.exe” -DBUILD_TYPE=Release -P cmake_install.cmake

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: if %errorlevel% neq 0 goto :cmEnd

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: :cmEnd

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: endlocal & call :cmErrorLevel %errorlevel% & goto :cmDone

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: :cmErrorLevel

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: exit /b %1

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: :cmDone

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: if %errorlevel% neq 0 goto :VCEnd

48>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\Microsoft.CppCommon.targets(113,5): error MSB3073: :VCEnd” exited with code 1.

48>

48>Build FAILED.

Very strange..

Thanks.

Could you help with compiling the Python and Matlab wrappers of Caffe? I don’t know what to do with them on Windows. Looking forward to your reply.

Firstly, thanks for this very detailed and useful tutorial. I managed (or I think I did) to get through all the compilation steps, but I finally get an error when running create_mnist.bat (modified for the leveldb format). I only get the following message: “The application was unable to start correctly (0xc000007b)”. I downloaded the leveldb-formatted MNIST dataset from niuzhiheng’s GitHub to try launching the training, but the same error occurs when running train_lenet.bat. I have no idea where it comes from.

Hoping someone can help. Thanks in advance!

Regards,

Stephane

I think good practice is to drag the caffe.cpp file in the ‘tools’ folder into your Visual Studio project, so that you can run caffe in VS. Debugging is easier than running an exe.

Thanks for your answer, but it still does not work. Sorry, I don’t know how to run caffe with VS debugging; it is always the exe that runs, even though the solution is in Debug mode. When the exe crashes, it does not enter the code, it just stops. Sorry for the inconvenience; it seems to be a basic Visual Studio problem and not a problem with the tutorial. So frustrating when close to the end of the tutorial ;-).

To run caffe with VS debugging, see here http://stackoverflow.com/questions/3697299/passing-command-line-arguments-in-visual-studio-2010 and here https://msdn.microsoft.com/en-us/library/cs8hbt1w(v=vs.90).aspx

But when I begin debugging in VS, the error ‘The application was unable to start correctly (0xc000007b)’ still comes up. How do I fix this? Thank you very much.

I have the same error. Did you find out how to fix it? Thank you very much.

At least I am not the only one with this error ;-). Sorry, but unfortunately I haven’t fixed it. I am still working on it, but I have no idea at all for the moment. I don’t understand what the entry point of the application is, because when I put breakpoints in the main() function of caffe.cpp or convert_mnist_data.cpp in Debug mode, they are never reached.

If you fix it, can you please tell me? Thank you.

Hello, I finally got it working!

My problem was very specific to my config, I think: I had conflicts with 32-bit dlls where 64-bit ones are required. In my case the dll seemed to be libgfortran-3.dll. I reinstalled the 64-bit version of the application that uses this dll to fix the problem.

I used Dependency Walker to find this; maybe you can also use it to check for conflicts between 32-bit and 64-bit dlls used by caffe.exe.

Hope it helps.

Regards,

Stephane

Hello Stephane,

I have same problem with libgfortran-3.dll.

Did you reinstall OpenBLAS to resolve the problem?

Hello,

I used the OpenBLAS version provided in the tutorial and just copied the dlls to the bin directory as indicated. I think it worked correctly, as I did not have to reinstall it. libgfortran-3.dll is also present in MinGW, so be sure to use the correct (64-bit) version.

For information, in my case the problem came from the Anaconda distribution that I use for Python development: it was the 32-bit version, and its libgfortran-3 file was the one loaded by caffe.exe.

Hope it helps.

Regards,

Stephane

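I guess you are a Chinese student; so am I, and I just solved this problem. Download the three dlls “libquadmath-0.dll”, “libgcc_s_sjlj-1.dll” and “libgfortran-3.dll” and put them in the directory of the generated executable. For details, see this blog post: http://blog.csdn.net/lien0906/article/details/44221355

Best wishes!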

Dear Stephane Zieba

Thank you very, very, very much. You are my hero. I have fixed it!!!!!! I put the three dlls “libquadmath-0.dll”, “libgcc_s_sjlj-1.dll” and “libgfortran-3.dll” in caffe/bin and it worked.

I have the same problem, missing “libquadmath-0.dll” “libgcc_s_sjlj-1.dll” “libgfortran-3.dll”

Can you send them to me? isr.nadav@gmail.com

>> You have to have a GPU on your PC lol

I do not have a GPU on my system. CUDA 6.5 installed fine and Visual Studio recognizes it. And at least at the start, I am happy to deal with 100x running times without GPU acceleration. Do you think I stand a chance without a GPU? 🙂 Or is upgrading my desktop with a suitable GPU card the only option?

Shouldn’t CUDA be needed only if you have an NVIDIA GPU in the first place? What if you have integrated graphics (such as on a laptop)? Will caffe not run on such a system? I have a laptop with Intel HD Graphics 3000. Is this machine not capable of running caffe?

I got this message while trying to install CUDA: “The graphics driver could not find compatible graphics hardware. You may continue installation, but you will not be able to run CUDA applications.”

The GPU version of Caffe requires an NVIDIA graphics card and CUDA. On your computer you can use the CPU version. If you don’t have an NVIDIA graphics card, of course you don’t need to install CUDA, which won’t work anyway.

hi

Thanks for your help. I am stuck at training. I have generated mnist_train_leveldb and mnist_test_leveldb, and modified caffe/examples/mnist/lenet_train_test.prototxt, substituting “lmdb” with “leveldb”. When I train, I get an error.

snapshot_prefix: “examples/mnist/lenet”

solver_mode: CPU

net: “examples/mnist/lenet_train_test.prototxt”

I0322 17:47:03.442919 4880 solver.cpp:70] Creating training net from net file: examples/mnist/lenet_train_test.prototxt

[libprotobuf ERROR ..\src\google\protobuf\text_format.cc:274] Error parsing text-format caffe.NetParameter: 17:3: Unknown enumeration value of “leveldb” for field “backend”.

F0322 17:47:03.445919 4880 upgrade_proto.cpp:928] Check failed: ReadProtoFromTextFile(param_file, param) Failed to parse NetParameter file: examples/mnist/lenet_train_test.prototxt

*** Check failure stack trace: ***

I cannot test it right now. Just guessing: maybe you should write LEVELDB in upper case. You can find this in caffe.proto:

message DataParameter {
  enum DB {
    LEVELDB = 0;
    LMDB = 1;
  }

Thanks for all of this. But could you please help with compiling the Python and Matlab wrappers? I don’t know what to do with them. Looking forward to your detailed help. Thanks.

Sorry but I don’t have Matlab at hand and I don’t use Python in Windows. I think if you use Python and Matlab instead of Visual Studio you should do that in Ubuntu.

Because the workstations in our lab aren’t connected to the internet, it’s very tedious to use Linux. So do you have any further development to replace the visualization in the Python wrapper?

Sure. I’ll try to figure it out and come up with some tutorials. But please keep in mind that Caffe’s source code is actually not difficult to understand if you debug it step by step in Visual Studio.

Thanks @Neil Shao, training done! Accuracy is 0.9908 after 10000 iterations.

Hi,

I have trained LeNet on MNIST and CIFAR-10, but I am unable to train on “VOC2012” with caffe.

Hoping someone can help. Thanks in advance!

In io.cpp, I added

#ifdef _MSC_VER
#define open _open
#define close _close
#endif

at the beginning of the file,

but I still get an error in the ReadFileToDatum function at “file.close();”.

How do I fix this? Thank you.

It’s because “file.close()” is changed to “file._close()” by the macro. The easy solution is to delete the “close” macro and manually change “close()” to “_close()” everywhere except “file.close()”.

How do I fix the “file.close()” error? Comment it out? Thank you very much!

“file.close()” itself is not an error; it is “file._close()” that causes the error. So if you delete “#define close _close”, “file.close()” will be just fine.
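The root cause is that the preprocessor works on tokens: `#define close _close` rewrites every `close` token, including the member call in `file.close()`. Even a function-like macro such as `#define close(fd) _close(fd)` would still match `file.close()`. A named wrapper avoids the collision entirely; a sketch with a hypothetical `close_fd` helper:

```cpp
#include <cassert>
#include <fstream>
#ifdef _MSC_VER
#include <io.h>       // _close
#else
#include <unistd.h>   // close
#endif

// Hypothetical helper: closes a file descriptor on MSVC and POSIX.
// Because it has its own name, no macro named `close` is needed.
inline int close_fd(int fd) {
#ifdef _MSC_VER
    return _close(fd);
#else
    return ::close(fd);
#endif
}

// With no `close` macro in scope, member calls such as file.close()
// compile and behave normally in the same translation unit.
inline bool stream_close_still_works(const char* path) {
    std::ofstream out(path);
    out << "caffe";
    out.close();
    std::ifstream in(path);
    const bool opened = in.is_open();
    in.close();
    return opened && !in.is_open();
}
```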

Hi, nice work!

But why don’t you use NuGet in VS?

It is quite easy to install OpenCV and Boost with it.

Hi,

I followed your great tutorial and successfully created caffe.exe in the /caffe/bin folder, but when I run “C:\caffe\bin>caffe.exe” in the Windows cmd, the program crashes: ‘The application was unable to start correctly (0xc000007b)’

How can I fix this? Thank you very much

Hello,

I have this exact same problem. I have been struggling with this for days. Have you been able to get around this error? I get the 0xc000007b error also.

Thanks,

Bonzi

According to other comments on this page, maybe you are using 32-bit versions of libgcc_s_seh-1.dll, libgcc_s_sjlj-1.dll, libgfortran-3.dll and libquadmath-0.dll. Please try replacing them with 64-bit ones.
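Error 0xc000007b is STATUS_INVALID_IMAGE_FORMAT, which typically means a 64-bit program ended up loading a 32-bit DLL (for example one found earlier on the PATH, as in the Anaconda case described above). Besides Dependency Walker, you can check a DLL’s architecture by reading the Machine field of its PE header. A minimal sketch, assuming the standard PE/COFF layout; `pe_machine`, `describe_machine` and `load_file` are hypothetical helpers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <iterator>
#include <string>
#include <vector>

// Hypothetical helper: returns the COFF Machine field of a PE image (EXE/DLL),
// or 0 if the bytes do not look like a PE file. Layout: "MZ" at offset 0,
// a little-endian 32-bit offset (e_lfanew) at 0x3C pointing to "PE\0\0",
// immediately followed by the 16-bit Machine field.
inline std::uint16_t pe_machine(const std::vector<unsigned char>& img) {
    auto u16 = [&img](std::size_t off) {
        return static_cast<std::uint16_t>(img[off] | (img[off + 1] << 8));
    };
    auto u32 = [&img](std::size_t off) {
        return static_cast<std::uint32_t>(img[off]) |
               (static_cast<std::uint32_t>(img[off + 1]) << 8) |
               (static_cast<std::uint32_t>(img[off + 2]) << 16) |
               (static_cast<std::uint32_t>(img[off + 3]) << 24);
    };
    if (img.size() < 0x40 || img[0] != 'M' || img[1] != 'Z') return 0;
    const std::size_t pe = u32(0x3C);
    if (pe > img.size() || img.size() - pe < 6) return 0;
    if (img[pe] != 'P' || img[pe + 1] != 'E' || img[pe + 2] != 0 || img[pe + 3] != 0) return 0;
    return u16(pe + 4);
}

// 0x8664 is IMAGE_FILE_MACHINE_AMD64, 0x014C is IMAGE_FILE_MACHINE_I386.
inline std::string describe_machine(std::uint16_t machine) {
    if (machine == 0x8664) return "x64";
    if (machine == 0x014C) return "x86 (32-bit)";
    return "unknown";
}

// Hypothetical helper: reads a whole file; empty if it cannot be opened.
inline std::vector<unsigned char> load_file(const std::string& path) {
    std::ifstream f(path, std::ios::binary);
    return std::vector<unsigned char>(std::istreambuf_iterator<char>(f),
                                      std::istreambuf_iterator<char>());
}
```

For example, `describe_machine(pe_machine(load_file("libgfortran-3.dll")))` should report x64 for every DLL that caffe.exe loads; an x86 result points to a likely culprit.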

hi,

Thanks for this tutorial; it helped me a lot, and I have run some examples successfully. Now I am training ImageNet, but I get an error when running “create_imagenet.sh”:

I0327 22:49:42.529912 5664 convert_imageset.cpp:86] A total of 0 images.

F0327 22:49:42.529912 5664 db.cpp:30] Check failed: _mkdir(source.c_str()) == 0 (-1 vs. 0) mkdir data/ilsvrc12/val.txtfailed

How can I fix this? Thanks in advance!

I had that error and figured out that something was wrong with the paths I specified.

Here’s an example .bat file that works, at least for me:

set ROOTFOLDER=E:\Data\MNIST\
set FILES=E:\Data\MNIST\test_dr.txt
set DATA=E:\Data\MNIST\MNIST_TEST_
set BINARIES=G:\Ilya\Projects\caffe-master\bin\
cd %ROOTFOLDER%
set BACKEND=leveldb
rd /s /q "%DATA%%BACKEND%"
echo "Creating %BACKEND%..."
"%BINARIES%convert_images.exe" %ROOTFOLDER% %FILES% %DATA%%BACKEND% --backend=%BACKEND%
echo "Done."
pause

Hi,

I solved my problem. My output folder name contained a space; I used a tab sequence to work around it, but somehow the directory still couldn't be created, so I renamed the folder to remove the space, and it worked.

Hi, I followed your detailed steps and finally made it work. But the precision I got on MNIST was only 0.13. Do you know why? Thanks a lot.

Hello! Thank you for the excellent guide. I was able to build 'convert_images' and 'caffe', but I didn't find any documentation about using a trained net, so I decided to build the Python module. Even after days of working through it, I still wasn't able to solve this error:

caffe.obj : error LNK2001: unresolved external symbol “__declspec(dllimport) struct _object * __cdecl boost::python::detail::init_module(struct PyModuleDef &,void (__cdecl*)(void))” (__imp_?init_module@detail@python@boost@@YAPEAU_object@@AEAUPyModuleDef@@P6AXXZ@Z)

1>G:\Ilya\Projects\caffe-master\bin\caffe.exe : fatal error LNK1120: 1 unresolved externals

I think I added everything Boost-related that I could to Additional Include Directories and Additional Library Directories…

Could you suggest something?

Hello. I haven't used a trained network yet, but did you try running caffe.exe with "test" instead of "train" as the command-line argument? According to line 142 of caffe.cpp, two other files describing the network must be provided: the model and the weights. Maybe they are the files generated during training (with extensions .caffemodel and .solverstate). It would be great if someone could confirm this.

Thanks for the hint, I tried it today.

Yes, it works.

To run it, you need two files:

caffe.exe test -model E:\Data\MNIST\lenet_train_test.prototxt -weights E:\Data\MNIST\lenet_iter_10000.caffemodel -iterations 1

*.prototxt is the config of your trained net, and *.caffemodel holds the weights (this file is produced during training).

I can't understand, though, how the number of specified iterations relates to the batch size in the net config, or why both are needed.
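As far as I understand `caffe test`, each iteration runs one forward pass over one batch from the TEST data layer and the reported scores are averaged over the iterations, so the number of images evaluated is iterations × batch_size. For example, the MNIST test set has 10,000 images and lenet_train_test.prototxt uses a test batch size of 100, so 100 iterations cover it exactly once. A small sketch of that arithmetic (iterations_to_cover is a hypothetical name):

```cpp
#include <cstddef>

// Smallest number of `caffe test` iterations so that
// iterations * batch_size covers every test image at least once.
std::size_t iterations_to_cover(std::size_t num_images, std::size_t batch_size) {
  return (num_images + batch_size - 1) / batch_size;  // ceiling division
}
```

If the count doesn't divide evenly, the data layer wraps around to the start, so a few images get counted twice in the last batch.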

I managed to build _caffe for Python, but after copying the .dll and .lib I still get an error in __init__.py / pycaffe.py:

from ._caffe import Net, SGDSolver

ImportError: No module named 'caffe._caffe'

Still trying to solve it...

Hello,

I'm trying to build the Python wrapper to test a network on my custom dataset following this method: "http://nbviewer.ipython.org/github/BVLC/caffe/blob/master/examples/classification.ipynb". How did you build _caffe for Python? I don't know how to use "make pycaffe" under Windows.

Thanks in advance for your help.

Stephane

I created a new project in Visual Studio (DLL type) and added everything as mentioned above, simply copying it from the previous project where we built caffe.cpp and the other tools. The .cpp and .h files should already be changed according to this blog, so you just need to add them to the project (for the layers folder and others, don't forget to change the "Build Customization").

I have Anaconda with Python 3.4, so I added the essential dependencies for Python and NumPy (I can list the exact dependencies when I get to my work PC, but you can figure them out from the errors when building the project). You also need to build Boost 1.56 yourself (the prebuilt one didn't work in this case) and, separately, Boost.Python.

I ran into this same problem. It turns out this error occurs not only when Python can't find the _caffe extension itself (the obvious interpretation), but also when Windows can't find some of the _caffe extension's dependencies. The first thing to try is to rename _caffe.dll to _caffe.pyd (since on Windows, Python only imports extension modules with the .pyd extension) and then make sure that _caffe.pyd is on your PYTHONPATH (for example, put it in your site-packages directory). You can list the directories Python searches for modules with:

import sys

print(sys.path)

If that doesn't work, use Dependency Walker (http://www.dependencywalker.com/) to find out whether the module has trouble loading any of its dependencies. Just open the _caffe.pyd file you just moved in Dependency Walker. In my case, I had forgotten to add libglog.dll and libopenblas.dll to my Windows PATH.

After that, you will probably also run into a problem with protobuf. Namely, you won’t have the caffe.proto submodule. In order to make this, you have to put protoc.exe in your path and then cd to the caffe directory and use these commands:

mkdir -p python/caffe/proto && \
touch python/caffe/proto/__init__.py && \
protoc --proto_path=src/caffe/proto --python_out=python/caffe/proto src/caffe/proto/caffe.proto && \
protoc --proto_path=src/caffe/proto --python_out=python/caffe/proto src/caffe/proto/caffe_pretty_print.proto

This will give you caffe.proto, which you won’t get when building _caffe.dll in VS. Finally, if you get an “unexpected keyword argument” error when loading protobuf, this is likely from using protobuf 3.0. Use protobuf 2.6 instead (recompile _caffe.dll with the protobuf 2.6 libs as well). Hope that helps!

Dave

Wow, how did you manage to build _caffe for Python? By putting _caffe.cpp into the project? I have no idea what I should do on Windows…

Hi Ilya, do you remember how you solved the problem with the unresolved external symbol "__declspec(dllimport) struct _object * __cdecl boost::python::detail::init_module…"?

Everything works fine except for the following error. Please help.

error MSB3191: Unable to create directory “C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v6.5\lib\x64 $C:\Users\dan\Documents\Utilities\opencv\build\x64\vc12\lib $C:\local\boost_1_56_0”. The given path’s format is not supported.

How did this happen? Maybe the $ is not recognized, and you need a ";" between the different paths.

Thanks for your reply, Neil. I deleted the "$" and added "; " in the linker settings, and got the following error:

Error 109 error LNK1104: cannot open file ‘(CUDA_PATH_V6_5)\lib\,x64 ;C:\Users\dan\Documents\Utilities\opencv\build\x64\vc12\lib ;C:\local\boost_1_56_0\lib64-msvc-12.0$’ C:\Users\dan\Documents\caffe-master\caffe\caffe\LINK caffe

$(CUDA_PATH_V6_5) is the path to CUDA, so don't delete the $. For the rest, you just have to make sure the paths are valid.

Yes

I am doing the one mentioned in step 6.

I am configuring the linker in the following way: $(CUDA_PATH_V6_5)\lib\$(PlatformName) $(OPENCV_X64_VS2013_2_4_9)\lib $(BOOST_1_56_0)\lib64-msvc-12.0

But the error is still there:

error MSB3191: Unable to create directory “C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v6.5\lib\x64 C:\Users\dan\Documents\Utilities\opencv\build\lib C:\local\boost_1_56_0”. The given path’s format is not supported.

The ; is needed.

$(CUDA_PATH_V6_5)\lib\$(PlatformName);$(OPENCV_X64_VS2013_2_4_9)\lib; $(BOOST_1_56_0)\lib64-msvc-12.0;
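The reason the separator matters: the Additional Library Directories field is a semicolon-separated list, so without the ";" the whole string is read as a single (invalid) directory, which is what MSB3191 complained about. Here is a rough sketch of that splitting; split_dirs is hypothetical, and unlike MSBuild it does not expand $(...) macros.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Splits a semicolon-delimited directory list into entries, trimming
// surrounding spaces and skipping empty entries (e.g. after a trailing ';').
// Unlike MSBuild, this sketch leaves $(...) macros unexpanded.
std::vector<std::string> split_dirs(const std::string& value) {
  std::vector<std::string> dirs;
  std::stringstream ss(value);
  std::string item;
  while (std::getline(ss, item, ';')) {
    const auto first = item.find_first_not_of(' ');
    if (first == std::string::npos) continue;  // empty or all-space entry
    const auto last = item.find_last_not_of(' ');
    dirs.push_back(item.substr(first, last - first + 1));
  }
  return dirs;
}
```

Fed the working value above, this yields three directories; fed the space-separated version, it yields one long entry.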

After fixing that, I got another error:

error MSB4018: The “VCMessage” task failed unexpectedly.

System.FormatException: Index (zero based) must be greater than or equal to zero and less than the size of the argument list.

at System.Text.StringBuilder.AppendFormat(IFormatProvider provider, String format, Object[] args)

at System.String.Format(IFormatProvider provider, String format, Object[] args)

at Microsoft.Build.Shared.ResourceUtilities.FormatString(String unformatted, Object[] args)

at Microsoft.Build.Utilities.TaskLoggingHelper.FormatString(String unformatted, Object[] args)

at Microsoft.Build.Utilities.TaskLoggingHelper.FormatResourceString(String resourceName, Object[] args)

at Microsoft.Build.Utilities.TaskLoggingHelper.LogWarningWithCodeFromResources(String messageResourceName, Object[] messageArgs)

at Microsoft.Build.CPPTasks.VCMessage.Execute()

at Microsoft.Build.BackEnd.TaskExecutionHost.Microsoft.Build.BackEnd.ITaskExecutionHost.Execute()

at Microsoft.Build.BackEnd.TaskBuilder.d__20.MoveNext()

Hi, Neil! I succeeded in getting LMDB to work in your project.

In case you are still interested, here is what I did.

1. Remove lmdb.lib from the project.

2. Change the character set from "Unicode" to "Multi-byte" in the project's "Property" settings.

This was necessary in my Windows 7 environment (Japanese language) to make the file paths work correctly.

3. Add mdb.c and midl.c into your project.

4. Change the following lines in “mdb.c”.

(1) Add the two lines at the top of the file.

#pragma warning( disable : 4996 )

#pragma warning( disable : 4146 )

(2) A cast is necessary for the second argument of THREAD_CREATE().

#if defined(_MSC_VER)

THREAD_CREATE(thr, (LPTHREAD_START_ROUTINE)mdb_env_copythr, &my);

#else

THREAD_CREATE(thr, mdb_env_copythr, &my);

#endif

(3) To make the file paths work on Windows, use a backslash instead of a slash (written as "\\" inside a C string literal).

#if defined(_MSC_VER)

/** The name of the lock file in the DB environment */

#define LOCKNAME "\\lock.mdb"

/** The name of the data file in the DB environment */

#define DATANAME "\\data.mdb"

/** The suffix of the lock file when no subdir is used */

#else

/** The name of the lock file in the DB environment */

#define LOCKNAME "/lock.mdb"

/** The name of the data file in the DB environment */

#define DATANAME "/data.mdb"

/** The suffix of the lock file when no subdir is used */

#endif

(4) To truncate the file to its actual size at the end of the process, add a line in "mdb_env_close0(MDB_env *env, int excl)".

if (env->me_fd != INVALID_HANDLE_VALUE)

{

#if defined(_MSC_VER)

// Truncate the file size.

SetEndOfFile(env->me_fd);

#endif

(void)close(env->me_fd);

}

5. Change the following lines in "convert_mnist_data.cpp".

(1) This is only for convenience: it forces the directory to be overwritten if it already exists.

#if defined(_MSC_VER)

if (0 != _mkdir(db_path))

{

std::string command("rd /s /q "); // Force removal of the existing directory.

command.append(db_path);

system(command.c_str());

CHECK_EQ(_mkdir(db_path), 0) << "mkdir " << db_path << "failed";

}

#else

CHECK_EQ(mkdir(db_path, 0744), 0) << "mkdir " << db_path << "failed";

#endif

(2) Since LMDB creates a file whose size equals "mapSize", 1TB was too large for my PC.

With 1GB or 40GB, mdb_env_open(mdb_env, db_path, 0, 0664) succeeded.

In "lmdb.h", there is a comment saying "The size should be a multiple of the OS page size".

Therefore, I made the following change:

#if defined(_MSC_VER)

SYSTEM_INFO si;

GetSystemInfo(&si);

const size_t mapSize = static_cast<size_t>(si.dwPageSize) * 300000; // 1GB : OK

//const size_t mapSize = static_cast<size_t>(si.dwPageSize) * 10000000; // 40GB : OK

CHECK_EQ(mdb_env_set_mapsize(mdb_env, mapSize), MDB_SUCCESS) // 1GB

<< "mdb_env_set_mapsize failed";

#else

CHECK_EQ(mdb_env_set_mapsize(mdb_env, 1099511627776), MDB_SUCCESS) // 1TB

<< "mdb_env_set_mapsize failed";

#endif

(3) Add the following lines before "delete pixels". They keep the file size as small as possible;

otherwise, the file might stay as large as "mapSize".

#if defined(_MSC_VER)

else

{

if (db_backend == "lmdb")

{ // Truncate and close the file.

mdb_close(mdb_env, mdb_dbi);

mdb_env_close(mdb_env);

}

}

#endif

delete pixels;
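A side note on step 5(1): if db_path contains spaces (which also caused the mkdir failure mentioned earlier in these comments), "rd /s /q " followed by the bare path won't remove the right directory. A minimal sketch that quotes the path; build_rd_command is a hypothetical helper, and it does not handle paths that themselves contain double quotes.

```cpp
#include <string>

// Hypothetical helper: builds the "rd /s /q" command used in step 5(1),
// wrapping the path in double quotes so directories with spaces work.
std::string build_rd_command(const std::string& db_path) {
  return "rd /s /q \"" + db_path + "\"";
}
```

You would then pass build_rd_command(db_path).c_str() to system() instead of the unquoted string.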

That's all I remember now, although I could be missing something.

If you still have any problems, don't hesitate to ask me.
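For step 5(2), the map sizes work out as follows, assuming 4096-byte pages (the usual dwPageSize): 4096 × 300000 = 1,228,800,000 bytes (about 1.2 GB) and 4096 × 10000000 = 40,960,000,000 bytes (about 41 GB). If you'd rather start from a target size, here is a sketch that rounds it up to a page multiple, per the lmdb.h remark. round_up_to_page is hypothetical; it uses 64-bit arithmetic so a 40 GB value can't overflow a 32-bit size_t.

```cpp
#include <cstdint>

// Rounds a requested LMDB map size up to a multiple of the OS page size.
// On Windows, page_size would come from GetSystemInfo's dwPageSize.
std::uint64_t round_up_to_page(std::uint64_t bytes, std::uint64_t page_size) {
  return (bytes + page_size - 1) / page_size * page_size;
}
```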

Thank you Kazukuni! It’s very helpful!

Any suggestions?

error LNK1104: cannot open file ‘libopenblas.dll.a’

Folks, I'm having some trouble. After compiling Caffe, I get 631 link errors like this:

1>solver.obj : error LNK2019: unresolved external symbol "public: virtual __cdecl caffe::SolverParameter::~SolverParameter(void)" (??1SolverParameter@caffe@@UEAA@XZ) referenced in function "public: __cdecl caffe::Solver::Solver(class std::basic_string<char,struct std::char_traits,class std::allocator > const &)" (??0?$Solver@M@caffe@@QEAA@AEBV?$basic_string@DU?$char_traits@D@std@@V?$allocator@D@2@@std@@@Z)

How can I solve them?

Best Wishes

Thank you very much~

Hi, Neil Shao,

Now I have 9 link errors like this: Error 15 error LNK2019: unresolved external symbol "void __cdecl caffe::WriteProtoToBinaryFile(class google::protobuf::Message const &,char const *)" (?WriteProtoToBinaryFile@caffe@@YAXAEBVMessage@protobuf@google@@PEBD@Z) referenced in function "protected: void __cdecl caffe::Solver::Snapshot(void)" (?Snapshot@?$Solver@M@caffe@@IEAAXXZ) H:\CAFFEnew\caffe\caffe\solver.obj. I have added all the include directories and dependencies.

I solved it myself, thanks.

Best wishes to you.

Many thanks!

How?

Hello Neil, Thank you very much for your useful guide.

Two errors occurred when I tried to compile the code in CPU_ONLY mode. I am using Visual Studio 12, and I entered CPU_ONLY in the preprocessor definitions to run in CPU_ONLY mode. I didn't add the .cu files for this mode.

\caffe\src\caffe\syncedmem.cpp(93): error C4716: ‘caffe::SyncedMemory::gpu_data’ : must return a value

\caffe\src\caffe\syncedmem.cpp(109): error C4716: ‘caffe::SyncedMemory::mutable_gpu_data’ : must return a value

I could compile the codes for GPU but as soon as I go to the CPU_ONLY mode I encounter these errors. I would appreciate your help.

Also, would you please elaborate on your following reply? Where do you enter these commands?

An example is roughly like this:

// Set CPU

Caffe::set_mode(Caffe::CPU);

// Set to TEST Phase

Caffe::set_phase(Caffe::TEST);

// Load net

Net<float> net("deploy.prototxt");

// Load pre-trained net (binary proto)

net.CopyTrainedLayersFrom("trained_model.caffemodel");

It seems you need to look at lines 93 and 109 of `syncedmem.cpp` and make small changes to work around it.

I put a "return 0;" at the end of the #else sections of the two failing methods, and the code compiled.
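Why the extra return works: in CPU_ONLY builds those methods end in a fatal-error call (in the Caffe source I've looked at, the NO_GPU macro expands to a LOG(FATAL)), but the compiler doesn't know that call never returns, so C4716 sees a path with no return value. A minimal sketch of the pattern; report_fatal and SyncedMemorySketch are hypothetical stand-ins, not Caffe's classes.

```cpp
#include <stdexcept>

// Assume CPU_ONLY is set, as in the preprocessor definitions described above.
#ifndef CPU_ONLY
#define CPU_ONLY
#endif

// Hypothetical stand-in for a LOG(FATAL)-style reporter. It is not declared
// noreturn, so the compiler must assume control can come back from it.
void report_fatal(const char* msg) { throw std::runtime_error(msg); }

class SyncedMemorySketch {
 public:
  const void* gpu_data() {
#ifndef CPU_ONLY
    return gpu_ptr_;
#else
    report_fatal("Cannot use GPU in CPU-only build");
    return nullptr;  // never reached at run time; only satisfies C4716
#endif
  }

 private:
  void* gpu_ptr_ = nullptr;
};
```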

Neil: Would you please tell me where to put these:

// Set CPU

Caffe::set_mode(Caffe::CPU);

// Set to TEST Phase

Caffe::set_phase(Caffe::TEST);

// Load net

Net<float> net("deploy.prototxt");

// Load pre-trained net (binary proto)

net.CopyTrainedLayersFrom("trained_model.caffemodel");

Sorry for the possibly obvious question.

Thanks for sharing! There are some additional .cpp files in the tools folder to build, such as extract_features.cpp, finetune_net.cpp, and so on. It would be great if you could share how to modify and build these files on Windows!

Thanks for your contribution. I found an error when running your shared code. When I changed the pooling size of the second pooling layer from 3 to 1 in the cifar10_quick net, an error like "loss = -1.#QNAN" appeared, and when I changed the square convolution map to 1 by n, similar errors happened too. Can you help check the problem?

The "loss = -1" problem happened to me before. I fixed it by lowering the learning rate.

Yes. I tried setting the momentum smaller, and that also works. However, when the net is deep enough, changing these two parameters alone cannot fix the problem. I found that some neurons' values become Inf during iteration, but I have not found out why. The old version released by Niuzhiheng doesn't have this problem, so if you have time, you could have a look at it. Thanks.
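To find where values first blow up, one option is to scan a blob's data (for example, what Blob::cpu_data() returns) after each forward pass and report the first non-finite entry. A minimal sketch; first_nonfinite is a hypothetical helper, not part of Caffe.

```cpp
#include <cmath>
#include <cstddef>

// Returns the index of the first NaN or Inf in a buffer,
// or `count` if every value is finite.
template <typename Dtype>
std::size_t first_nonfinite(const Dtype* data, std::size_t count) {
  for (std::size_t i = 0; i < count; ++i) {
    if (!std::isfinite(data[i])) return i;
  }
  return count;
}
```

Checking each layer's top blob in order tells you which layer produces the first Inf.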

Hi, great work getting caffe to run on windows.

Would it be possible to distribute a pre-compiled Caffe binary built from your instructions? It would definitely save me the trouble of building it from scratch. If not, I'll give it a go and try my luck.

Thanks.

Thanks for this, you saved us a bunch of time.

Hi! To compile the current db.cpp you also need to add the workaround:

#include <direct.h>

#ifndef _MSC_VER

CHECK_EQ(mkdir(source.c_str(), 0744), 0) << "mkdir " << source << "failed";

#else

CHECK_EQ(_mkdir(source.c_str()), 0) << "mkdir " << source << "failed";

#endif

I meant:

#include "direct.h"
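The same #ifdef can be wrapped once in a small helper, so db.cpp and the convert tools don't each repeat it. A sketch with hypothetical helper names make_dir and remove_dir:

```cpp
#include <string>
#ifdef _MSC_VER
#include <direct.h>    // _mkdir, _rmdir
#else
#include <sys/types.h>
#include <sys/stat.h>  // mkdir
#include <unistd.h>    // rmdir
#endif

// Hypothetical helpers wrapping the same #ifdef as above; both return 0 on success.
int make_dir(const std::string& path) {
#ifdef _MSC_VER
  return _mkdir(path.c_str());
#else
  return mkdir(path.c_str(), 0744);
#endif
}

int remove_dir(const std::string& path) {
#ifdef _MSC_VER
  return _rmdir(path.c_str());
#else
  return rmdir(path.c_str());
#endif
}
```

The db.cpp check then becomes CHECK_EQ(make_dir(source), 0) on both platforms.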

I have two GPUs. How can I choose which GPU to use? Would something like this work?

cd ../../
"bin/caffe.exe" train --solver=examples/mnist/lenet_solver.prototxt -gpu 1
pause

Could you please look into the following issue? I compiled Caffe successfully on Windows 7 with VS 2013 using your guide, and I trained LeNet and my own model with it as well. But when it comes to using the trained models for prediction, I'm in trouble.

Training works as expected with this prototxt:

name: "FACES"
layer {
  name: "data"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TRAIN
  }
  data_param {
    source: "examples/_faces/trainldb"
    batch_size: 155
    backend: LEVELDB
  }
}
layer {
  name: "data"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TEST
  }
  data_param {
    source: "examples/_faces/testldb"
    batch_size: 45
    backend: LEVELDB
  }
}
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  convolution_param {
    num_output: 12
    kernel_size: 13
    stride: 2
    weight_filler {
      type: "gaussian" # initialize the filters from a Gaussian
      std: 0.01 # distribution with stdev 0.01 (default mean: 0)
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
...
layer {
  name: "accuracy"
  type: "Accuracy"
  bottom: "ip2"
  bottom: "label"
  top: "accuracy"
  include {
    phase: TEST
  }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "ip2"
  bottom: "label"
  top: "loss"
}

But when I try to load the model for making predictions with this prototxt:

name: "FACES"
input: "data"
input_dim: 1
input_dim: 3
input_dim: 150
input_dim: 150
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  convolution_param {
    num_output: 12
    kernel_size: 13
    stride: 2
  }
}
...
layer {
  name: "prob"
  type: "Softmax"
  bottom: "ip2"
  top: "prob"
}

It fails with: target_blobs.size() == source_layer_blobs.size() (2 vs. 0) – Incompatible number of blobs for layer 1

But I can't see a mistake on my side, and it also matches a similar setup for CaffeNet.

My initialization code is pretty simple:

#include <glog/logging.h>
#include <cstring>
#include <map>
#include <string>
#include <vector>
#include "boost/algorithm/string.hpp"
#include "caffe/caffe.hpp"

using caffe::Blob;
using caffe::Caffe;
using caffe::Net;
using caffe::Layer;
using caffe::shared_ptr;
using caffe::Timer;
using caffe::vector;

int main(int argc, char** argv) {
  Caffe::set_mode(Caffe::CPU);
  Net<float>* net = new Net<float>("azoft_faces.prototxt", caffe::TEST);
  net->CopyTrainedLayersFrom("azf3_iter_100.caffemodel");
  delete net;
  return 0;
}

The same error also occurs with the MNIST example. It trained successfully, but when I try to load the resulting model with lenet.prototxt for recognition, it throws an exception: "target_blobs.size() == source_layer_blobs.size() (2 vs. 0) – Incompatible number of blobs for layer conv1".

I think it's because your second prototxt doesn't have parameters for the data layer.

The source of the data layer can be a text file containing the list of test images (full paths), or an LMDB file.

The type of the data layer can also be memory_data.

Thank you for such a quick reply, Neil. I had already tried replacing

input: "data"
input_dim: 1
input_dim: 3
input_dim: 150
input_dim: 150

with

layer {
  name: "data"
  type: "MemoryData"
  top: "data"
  top: "label"  # doesn't make sense to me here, but if I omit it, I get a CHECK_EQ(ExactNumTopBlobs(), top.size()) assert
  memory_data_param {
    batch_size: 1
    channels: 1
    height: 150
    width: 150
  }
}

But I get exactly the same error. I'm not sure it is correct, though; there isn't much info on MemoryData layers.

Hi. You need to make sure the data layer has data before forward propagation.

If the type is Data, the net will read from the file when it is created.

If the type is memory_data, you need to pass the data in from your program.

"Hi. You need to make sure data layer has data before forward propagation.

If the type is memory_data, you need to pass data in your program."

I'm not doing propagation yet. It gives me the error when I'm just trying to load the trained model.

layer {
  name: "data"
  type: "ImageData"
  top: "data"
  top: "label"
  image_data_param {
    batch_size: 1
    source: "C:/caffe/examples/_faces/test_image.txt"
  }
}

This also results in "target_blobs.size() == source_layer_blobs.size() (2 vs. 0) – Incompatible number of blobs for layer conv1". My version of Caffe (the latest one) did not compile it correctly. Do you use proto v1 ("layers", "IMAGE_DATA") or proto v2 ("layer", "ImageData")?

I had to change it to the following for it to be parsed correctly:

layer {
  name: "data"
  type: "Data"
  top: "data"
  top: "label"
  data_param {
    source: "examples/_faces/testldb"
    batch_size: 45
    backend: LEVELDB
  }
}

And... it still gives the same error. Furthermore, I have to use either basic input or MemoryData for my application. I think something went wrong in my project.

If you have some time, please take a look at this minimal example (solution files + source):

dropbox. com/s/0jyld3lpiaky2we/caffe_wtf.7z?dl=0

Thank you.

It was the O_RDONLY | O_BINARY flag in io.cpp... Now none of the combinations I tried crash anymore.
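Why O_BINARY matters on Windows: without it, the Microsoft CRT opens the file in text mode, translating CR-LF to LF on reads and treating Ctrl+Z (0x1A) as end-of-file, so a binary .caffemodel can come back truncated, which would explain the "(2 vs. 0)" blob mismatch. Here is a sketch that reads a whole file in binary mode and also compiles on POSIX, where O_BINARY is defined to 0 if missing; read_binary is a hypothetical name.

```cpp
#include <fcntl.h>
#include <string>
#include <vector>
#ifdef _MSC_VER
#include <io.h>
#else
#include <unistd.h>
#endif

#ifndef O_BINARY
#define O_BINARY 0  // POSIX has no text/binary distinction, so the flag is a no-op there
#endif

// Hypothetical helper: reads a whole file without any text-mode translation.
std::vector<char> read_binary(const std::string& path) {
  std::vector<char> data;
#ifdef _MSC_VER
  int fd = _open(path.c_str(), O_RDONLY | O_BINARY);
#else
  int fd = open(path.c_str(), O_RDONLY | O_BINARY);
#endif
  if (fd < 0) return data;
  char buf[4096];
  for (;;) {
#ifdef _MSC_VER
    int n = _read(fd, buf, sizeof buf);
#else
    ssize_t n = read(fd, buf, sizeof buf);
#endif
    if (n <= 0) break;  // 0 = end of file, negative = error
    data.insert(data.end(), buf, buf + n);
  }
#ifdef _MSC_VER
  _close(fd);
#else
  close(fd);
#endif
  return data;
}
```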

Hello,

Thank you for the tutorial. The project builds without errors, but I have a problem:

every time a glog function is called, the program crashes.

For example, when I go into the device_query() function:

check failed: FLAGS_gpu > -1 (-1 vs. -1) Need a device ID to query.

*** Check failure stack trace: ***

It fails when comparing -1 with -1??

I don't understand what GFlags + GLog + ProtoBuf + LevelDB are. I think I did something wrong during that step of your tutorial.

Can you help me?

It seems to me that you need to either set the mode to GPU and set the device (e.g. device ID 0), or set the mode to CPU.

I don't think the value of FLAGS_gpu is the problem. Every CHECK fails for me; FLAGS_gpu was just an example.

When I use your quick test on MNIST, I have a similar problem:

Flags_model.size() > 0 (0 vs. 0). Need a model definition to score.

I think something is wrong with my installation.

OK, my question was not relevant. You are right: the problem comes from the values of the flags.

I don't understand how it works, though. Do I have to set all the flags manually? Is there any initialization?
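On the "(-1 vs. -1)" confusion: a failed glog CHECK_GT prints the two operand values; it is not an equality test. FLAGS_gpu > -1 failed because -1 is not greater than -1. The FLAGS_ values are gflags command-line options, so they are set when you call caffe.exe, e.g. `caffe.exe device_query -gpu 0` or `caffe.exe test -model ... -weights ...`. The sketch below only mimics the message format; check_gt_message is hypothetical and not glog's implementation.

```cpp
#include <sstream>
#include <string>

// Hypothetical mimic of a glog CHECK_GT failure message.
// Returns "" when lhs > rhs (the check passes).
std::string check_gt_message(const std::string& expr, long lhs, long rhs) {
  if (lhs > rhs) return "";
  std::ostringstream os;
  os << "Check failed: " << expr << " (" << lhs << " vs. " << rhs << ")";
  return os.str();
}
```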

I got caffe.exe, but

Dependency Walker reports that caffe.exe is missing six Windows 8.1 system DLLs:

API-MS-WIN-CORE-KERNEL32-PRIVATE-L1-1-1.DLL

API-MS-WIN-CORE-PRIVATEPROFILE-L1-1-1.DLL

API-MS-WIN-SERVICE-PRIVATE-L1-1-1.DLL

API-MS-WIN-CORE-SHUTDOWN-L1-1-1.DLL

EXT-MS-WIN-NTUSER-UICONTEXT-EXT-L1-1-0.DLL

IESHIMS.DLL

Why?

I am using Windows 8.1 + VS2013.

Have you fixed this problem yet? I have exactly the same problem, and the last two .dll files can't even be found on Google...

Hi;

First, thank you for the tutorial here.

I ran into a problem while compiling Caffe in Visual Studio 2013 on Windows 7. VS keeps telling me that I have a syntax error in "hdf5_output_layer.cpp". I didn't change any code in that file, and I didn't find anyone else with this problem. This is the list of errors:

Error 18 error C2143: syntax error : missing ‘;’ before ‘<' \src\caffe\layers\hdf5_output_layer.cpp 18 1 Caffe

Error 19 error C2988: unrecognizable template declaration/definition \src\caffe\layers\hdf5_output_layer.cpp 18 1 Caffe

Error 20 error C2059: syntax error : '<' \src\caffe\layers\hdf5_output_layer.cpp 18 1 Caffe

Error 21 error C2039: 'HDF5OutputLayer' : is not a member of '`global namespace'' \src\caffe\layers\hdf5_output_layer.cpp 18 1 Caffe

Error 22 error C2588: '::~HDF5OutputLayer' : illegal global destructor \src\caffe\layers\hdf5_output_layer.cpp 28 1 Caffe

Error 23 error C1903: unable to recover from previous error(s); stopping compilation \src\caffe\layers\hdf5_output_layer.cpp 28 1 Caffe

24 IntelliSense: HDF5OutputLayer is not a template \src\caffe\layers\hdf5_output_layer.cpp 82 1 Caffe

Do you know what causes this problem and how I can solve it?

Best

Mohammad

Hi Mohammed,

It seems you have the same source files as me. You need to remove the comment markers from the declarations of the hdf5_load_nd_dataset_helper and hdf5_load_nd_dataset functions in io.hpp, as well as from the definition of the HDF5DataLayer template class in vision_layers.hpp.

For some reason, these lines are commented out in this version of the source.

Best Regards,

Jan

Hi,

I got stuck in contrastive_loss_layer.cpp on this line:

Dtype dist = std::max(margin - sqrt(dist_sq_.cpu_data()[i]), 0.0);

Error 28 error C2780: ‘_Ty std::max(std::initializer_list)’ : expects 1 arguments – 2 provided C:\work\Caffe\caffe\src\layers\contrastive_loss_layer.cpp 56 1 caffe

Error 27 error C2780: ‘const _Ty &std::max(const _Ty &,const _Ty &,_Pr)’ : expects 3 arguments – 2 provided C:\work\Caffe\caffe\src\layers\contrastive_loss_layer.cpp 56 1 caffe

Error 29 error C2782: ‘const _Ty &std::max(const _Ty &,const _Ty &)’ : template parameter ‘_Ty’ is ambiguous C:\work\Caffe\caffe\src\layers\contrastive_loss_layer.cpp 56 1 caffe

Error 26 error C2784: ‘_Ty std::max(std::initializer_list,_Pr)’ : could not deduce template argument for ‘std::initializer_list’ from ‘float’ C:\work\Caffe\caffe\src\layers\contrastive_loss_layer.cpp 56 1 caffe

Can someone help me out?

Thanks!

OK, found it: 0.0 needs to be cast as Dtype(0.0), i.e. std::max(margin - sqrt(dist_sq_.cpu_data()[i]), Dtype(0.0)).
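Why the cast is needed: std::max uses a single template parameter for both arguments, so with Dtype = float the call sees a float and a double and can't deduce one type. A self-contained sketch; hinge_distance is a hypothetical name modeled on the line above.

```cpp
#include <algorithm>
#include <cmath>

template <typename Dtype>
Dtype hinge_distance(Dtype margin, Dtype dist_sq) {
  // std::max(margin - std::sqrt(dist_sq), 0.0) fails for Dtype = float:
  // float vs. double makes std::max's template parameter ambiguous.
  return std::max(margin - std::sqrt(dist_sq), Dtype(0.0));
}
```

Writing std::max<Dtype>(..., 0.0) would also work, since it fixes the template argument explicitly.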

Hi,

I did the mnist training and it ran through without a problem. Thank you Neil for this post!

I am now looking for C++ code that does the testing on mnist with one’s own data. It seems to be a common problem for beginners. I saw a few postings on similar topics using python. Can someone provide a pointer for C++?

Thanks!

Paul

Since the argument of "__builtin_popcountl" is an unsigned long, which is a 64-bit integer on 64-bit Linux, I think it's better to use "#define __builtin_popcountl __popcnt64".

In fact, some of the tests fail with Visual Studio 2013 / 64-bit if __builtin_popcountl is replaced by __popcnt. With __popcnt64, these tests pass.
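To illustrate the difference: __popcnt takes a 32-bit unsigned int, so a 64-bit value like 0x100000000 is truncated to 0 and counts as 0 bits. A sketch of a 64-bit popcount that picks __popcnt64 on MSVC x64 and __builtin_popcountll on GCC/Clang; popcount64 is a hypothetical name, and __popcnt64 needs a CPU with the POPCNT instruction.

```cpp
#include <cstdint>
#if defined(_MSC_VER) && defined(_M_X64)
#include <intrin.h>
#endif

// Counts set bits in a full 64-bit value.
inline int popcount64(std::uint64_t x) {
#if defined(_MSC_VER) && defined(_M_X64)
  return static_cast<int>(__popcnt64(x));
#elif defined(__GNUC__)
  return __builtin_popcountll(x);
#else
  int n = 0;
  while (x) { x &= x - 1; ++n; }  // clear the lowest set bit each step
  return n;
#endif
}
```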

Hi,

I am getting a compile error on:

this->forward_gpu_gemm(bottom_data + bottom[i]->offset(n), weight, top_data + top[i]->offset(n));

I cannot find the definition of forward_gpu_gemm.

forward_gpu_gemm needs a fourth parameter, bool skip_im2col. I am not sure whether it should be true or false. Adding either true or false makes the compile error go away.

The fourth parameter for forward_gpu_gemm should be “false”.

this->forward_gpu_gemm(bottom_data + bottom[i]->offset(n), weight,

top_data + top[i]->offset(n), false);

With false, I get

Test net output #0: accuracy = 0.9907

which is as expected. I want to thank Neil for his excellent step-by-step instructions. They saved me a lot of time.

Hi,

Thanks for this tutorial, but I have run into some problems.

1. Error 18 error MSB3721: The command ""C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\bin\nvcc.exe" --use-local-env --cl-version 2013 -ccbin "X:\Program Files (x86)\Microsoft Visual Studio 12.0\VC\bin\x86_amd64" -IC:\Users\blackpepperX\Desktop\CAFFE_ROOT\include -IC:\Users\blackpepperX\Desktop\CAFFE_ROOT\3rdparty\include -IC:\Users\blackpepperX\Desktop\CAFFE_ROOT\3rdparty\include\openblas -IC:\Users\blackpepperX\Desktop\CAFFE_ROOT\3rdparty\include\hdf5 -IC:\Users\blackpepperX\Desktop\CAFFE_ROOT\3rdparty\include\lmdb -I"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\include" -IX:\opencv\build\include -IC:\local\boost_1_56_0 -IC:\Users\blackpepperX\Desktop\CAFFE_ROOT\src\caffe -IC:\Users\blackpepperX\Desktop\CAFFE_ROOT\src -I"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\include" -I"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\include" -G --keep-dir x64\Debug -maxrregcount=0 --machine 64 --compile -cudart static -g -DWIN32 -D_DEBUG -D_CONSOLE -D_LIB -D_CRT_SECURE_NO_WARNINGS -D_SCL_SECURE_NO_WARNINGS -D_UNICODE -DUNICODE -Xcompiler "/EHsc /W3 /nologo /Od /Zi /RTC1 /MDd " -o x64\Debug\bnll_layer.cu.obj "C:\Users\blackpepperX\Desktop\CAFFE_ROOT\src\caffe\layers\bnll_layer.cu"" exited with code 2. C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\V120\BuildCustomizations\CUDA 7.0.targets 593 9 caffe

This happens when compiling bnll_layer.cu.

2. Error 22 error C2784: "_Ty std::max(std::initializer_list,_Pr)": could not deduce template argument for "std::initializer_list" from "float"

This happens when compiling contrastive_loss_layer.cpp.

3. Error 17 error C3861: "_mkdir": identifier not found C:\Users\blackpepperX\Desktop\CAFFE_ROOT\src\caffe\util\db.cpp 32 1 caffe

This happens when compiling db.cpp.

Could you help out? Thanks!

Please don't forget to include direct.h...

Thanks for the useful tutorial. I haven't finished it yet, but I want to point out that the part starting from GFlags + GLog + ProtoBuf + LevelDB is very confusing. It would help if you added a few lines just before it explaining what is going on. Thank you.


Hi.

I have a problem and need your help.

I would like a detailed explanation of the settings.

Thank you.

Thanks for the great tutorial!

I have been using Caffe on Linux for a while now, but since I'm new to Linux, I was always struggling to get things working.

This makes life a lot easier!

I compiled it on Windows 7, VS2013, CUDA 7.0.

Everything works, including my own previous "Linux Caffe" experiments.

The only problem: it's quite a lot slower, on the order of 3 times slower.

This is probably due to CUDNN, which I couldn't get to work.

I used the latest master branch from BVLC (8 July 2015) and tried the following things to get CUDNN working.

First attempt, with the latest CUDNN (cudnn-6.5-win-v2-rc3):

– Add path to CUDNN folder to “additional include dirs”

– Add path to CUDNN folder to “additional library dirs”

– Add cudnn.lib, cudnn64_65.lib to “additional dependencies”

– add “USE_CUDNN” to the preprocessor definitions

– set "CUDA C/C++ -> Common -> Target Machine Type" to "64 bit"

If I now try to compile any of the cudnn layers, for instance: cudnn_conv_layer.c, I get the following errors:

IntelliSense: declaration is incompatible with “const char *__stdcall cudnnGetErrorString(cudnnStatus_t status)” (declared at line 98 of “D:\toolkits\cudnn_v2\cudnn.h”) d:\caffe\caffe-master\include\caffe\util\cudnn.hpp 17 20 caffe

Error

error MSB3721: The command ""C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\bin\nvcc.exe" -gencode=arch=compute_30,code=\"sm_30,compute_30\" --use-local-env --cl-version 2013 -ccbin "C:\Program Files (x86)\Microsoft Visual Studio 12.0\VC\bin\x86_amd64" -I../3rdparty/include -I../3rdparty/include/openblas -I../3rdparty/include/hdf5 -I../3rdparty/include/lmdb -I../include -I../src -ID:\toolkits\boost_1_56_0 -I"D:\toolkits\opencv-2.4.9\build\include" -I"D:\toolkits\opencv-2.4.9\build\include\opencv" -I"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\include" -ID:\toolkits\cudnn_v2 -I"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\include" -I"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\include" --keep-dir x64\Release -maxrregcount=0 --machine 64 --compile -cudart static -DWIN32 -DNDEBUG -D_CONSOLE -D_LIB -D_CRT_SECURE_NO_WARNINGS -DUSE_CUDNN -D_UNICODE -DUNICODE -Xcompiler "/EHsc /W3 /nologo /O2 /Zi /MD " -o x64\Release\cudnn_conv_layer.cu.obj "D:\caffe\caffe-master\src\caffe\layers\cudnn_conv_layer.cu"" exited with code 2.

error : declaration is incompatible with “const char *cudnnGetErrorString(cudnnStatus_t)” D:\caffe\caffe-master\include\caffe\util\cudnn.hpp 17 1 caffe

It seems that there are some incompatibilities between CUDNN V2 and caffe CUDNN layers.

If I instead use CUDNN V1 I get some other errors:

IntelliSense: expected a ‘;’ d:\caffe\caffe-master\include\caffe\util\cudnn.hpp 127 1

error MSB3721: The command ""C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\bin\nvcc.exe" -gencode=arch=compute_30,code=\"sm_30,compute_30\" --use-local-env --cl-version 2013 -ccbin "C:\Program Files (x86)\Microsoft Visual Studio 12.0\VC\bin\x86_amd64" -I../3rdparty/include -I../3rdparty/include/openblas -I../3rdparty/include/hdf5 -I../3rdparty/include/lmdb -I../include -I../src -ID:\toolkits\boost_1_56_0 -I"D:\toolkits\opencv-2.4.9\build\include" -I"D:\toolkits\opencv-2.4.9\build\include\opencv" -I"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\include" -ID:\toolkits\cudnn_v1 -I"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\include" -I"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v7.0\include" --keep-dir x64\Release -maxrregcount=0 --machine 64 --compile -cudart static -DWIN32 -DNDEBUG -D_CONSOLE -D_LIB -D_CRT_SECURE_NO_WARNINGS -DUSE_CUDNN -D_UNICODE -DUNICODE -Xcompiler "/EHsc /W3 /nologo /O2 /Zi /MD " -o x64\Release\conv_layer.cu.obj "D:\caffe\caffe-master\src\caffe\layers\conv_layer.cu"" exited with code 2.

error : identifier “cudnnTensorDescriptor_t” is undefined D:\caffe\caffe-master\include\caffe\util\cudnn.hpp 64 1 caffe

error : identifier “cudnnTensorDescriptor_t” is undefined D:\caffe\caffe-master\include\caffe\util\cudnn.hpp 69 1 caffe

error : identifier “cudnnTensorDescriptor_t” is undefined D:\caffe\caffe-master\include\caffe\util\cudnn.hpp 77 1 caffe

error : identifier “cudnnTensorDescriptor_t” is undefined D:\caffe\caffe-master\include\caffe\util\cudnn.hpp 102 1 caffe

It now seems that “cudnnTensorDescriptor_t” cannot be found at all, rather than being declared incompatibly.

So of course the question is: what am I doing wrong? Did I forget something, or should I use a different version of cuDNN (maybe one of the release candidates)?

I would be really grateful if you, or anyone else, could help me out 🙂

Solved it!

Using cuDNN v2, I had to change the following line in cudnn.hpp:

“inline const char* cudnnGetErrorString(cudnnStatus_t status)”

to

“inline const char * CUDNNWINAPI cudnnGetErrorString(cudnnStatus_t status)”

Now it works great, with a 3x speedup, similar to Linux performance!

Thank you!!!!!

I am able to compile common.cpp, but when I try to compile blob.cpp, net.cpp or solver.cpp, I get the same error:

Error 24 error C4996: ‘std::_Copy_impl’: Function call with parameters that may be unsafe – this call relies on the caller to check that the passed values are correct. To disable this warning, use -D_SCL_SECURE_NO_WARNINGS. See documentation on how to use Visual C++ ‘Checked Iterators’

Any inputs would be appreciated. Thanks in advance.

Got it fixed by adding “_SCL_SECURE_NO_WARNINGS” to the preprocessor definitions (Configuration Properties -> C/C++ -> Preprocessor -> Preprocessor Definitions).
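Besides the project-wide Preprocessor Definitions setting, the same macro can be defined in source. The sketch below assumes MSVC's checked-iterator warning C4996 is the one being silenced; the helper `copy_to_raw` is hypothetical and only illustrates a typical trigger, `std::copy` into a raw pointer. The define has to come before the first standard header in each file, and other compilers simply ignore it.

```cpp
// MSVC reads this macro when its standard headers are first included,
// so it must appear before any #include. Other compilers ignore it.
#ifndef _SCL_SECURE_NO_WARNINGS
#define _SCL_SECURE_NO_WARNINGS
#endif

#include <algorithm>
#include <vector>

// Hypothetical helper. Copying into a raw pointer with std::copy is a typical
// trigger for the C4996 "std::_Copy_impl ... may be unsafe" diagnostic,
// because the library cannot check the destination's bounds.
void copy_to_raw(const std::vector<float>& src, float* dst) {
  std::copy(src.begin(), src.end(), dst);
}
```

The project setting is usually the more convenient choice, since it covers every .cpp file at once instead of requiring the define at the top of each one.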

Now all the files in the layers folder compile except contrastive_loss_layer.cpp, which gives the following three errors:

Error 23 error C2784: ‘_Ty std::max(std::initializer_list,_Pr)’ : could not deduce template argument for ‘std::initializer_list’ from ‘float’

Error 24 error C2780: ‘const _Ty &std::max(const _Ty &,const _Ty &,_Pr)’ : expects 3 arguments – 2 provided

Error 26 error C2782: ‘const _Ty &std::max(const _Ty &,const _Ty &)’ : template parameter ‘_Ty’ is ambiguous

Thanks in advance.

Solved this by replacing

Dtype dist = std::max(margin - sqrt(dist_sq_.cpu_data()[i]), 0.0);

with

Dtype dist = std::max(margin - sqrt(dist_sq_.cpu_data()[i]), Dtype(0.0));
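Why the replacement works: `std::max` is a template with a single type parameter, so both arguments must have the same type. With `Dtype = float`, the first argument is most likely `float` (MSVC supplies a `float` overload of `sqrt`), while the literal `0.0` is a `double`, so the type cannot be deduced. A minimal sketch of the corrected expression, using a hypothetical free function `hinge_distance` in place of the layer's member data:

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical stand-alone version of the distance term in
// contrastive_loss_layer.cpp. std::max<T> needs two arguments of the same
// type T, so the literal is converted to Dtype instead of staying a double.
template <typename Dtype>
Dtype hinge_distance(Dtype margin, Dtype dist_sq) {
  // static_cast keeps the first argument in Dtype even if sqrt resolves
  // to the double overload on some toolchain.
  Dtype diff = margin - static_cast<Dtype>(std::sqrt(dist_sq));
  return std::max(diff, Dtype(0.0));
}
```

Naming the template argument explicitly, `std::max<Dtype>(..., 0.0)`, fixes it equally well, because the `double` literal is then converted to `Dtype`.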

Now I am able to compile every file, but when I build the whole caffe project I get 242 errors like these:

Error 2 error LNK2019: unresolved external symbol “void __cdecl caffe::caffe_gpu_axpy(int,float,float const *,float *)” (??$caffe_gpu_axpy@M@caffe@@YAXHMPEBMPEAM@Z) referenced in function “public: void __cdecl caffe::Blob::Update(void)” (?Update@?$Blob@M@caffe@@QEAAXXZ) D:\z_caffe\caffe-master\caffe\caffe\blob.obj caffe

Error 3 error LNK2001: unresolved external symbol “void __cdecl caffe::caffe_gpu_axpy(int,float,float const *,float *)” (??$caffe_gpu_axpy@M@caffe@@YAXHMPEBMPEAM@Z) D:\z_caffe\caffe-master\caffe\caffe\solver.obj caffe

Hi,

I followed your guidelines to install Caffe on Windows. I used CMake to create the VS solution and compiled Caffe statically. When I try to run the LeNet training example, the database conversion works fine, but training fails with an error about the layer type: layer_factory.hpp line 77, check failed, registry count type = 1; unknown layer type. Am I missing something? Is there a workaround?

thanks!

phani

Folks, after compiling the files, I am getting a huge number of LNK2001 errors: about 165 unresolved externals like the one shown below:

error LNK2001: unresolved external symbol “__declspec(dllimport) public: __thiscall google::base::CheckOpMessageBuilder::~CheckOpMessageBuilder(void)” (__imp_??1CheckOpMessageBuilder@base@google@@QAE@XZ) C:\Users\mhsc\Documents\Visual Studio 2012\Projects\caffe-master\caffe-master\caffe\caffe\solver.obj

I don't know what I am missing and I'm going crazy…

Any suggestion is very welcome, and thank you so much.

I think that's related to Google glog. Please check that the right glog library is specified in the linker options of the caffe project.

Thanks for this helpful guide! One comment I would make is that it’s important to use a consistent runtime library setting across all of the packages. For example, I had downloaded OpenCV 3.0.0 and found that the only copies of the OpenCV libs mentioned in the guide were in the staticlib dir, which doesn’t work well with the other precompiled 3rd-party libs provided. Fortunately, OpenCV 2.4.11 has the proper libs.

Also, is there an alternative location to get the OpenBLAS package? SourceForge seems to be having trouble at the moment. I’ve found some other OpenBLAS distributions (such as openblas.net), but it’s not clear whether they are related to the one from SourceForge.

Thanks!

Jeff

Hi, I’ve successfully finished your tutorial and it works fine. However, when I try to run the cifar10 example, it always shows “Check Failed: ReadProtoFromBinaryFile”. Have you ever run into this? Can you give me some suggestions? Thanks in advance :D

Hi. I’m not sure what happened here. Maybe the function “ReadProtoFromBinaryFile” in io.cpp failed. Check this line:

int fd = open(filename, O_RDONLY | O_BINARY);

The “O_BINARY” flag is necessary on Windows.

If you still cannot find what went wrong, you may try to follow my next tutorial to start the training process from Visual Studio. Maybe debug mode will show you the line that generated the error.

Hi, I followed your guidelines to install Caffe on Windows. Now all the files in the util folder compile except signal_handler.cpp, which gives the following errors:

SIGHUP, sigaction, SA_RESTART, sigfillset: not declared, not defined

Have you ever run into this? Can you give me some suggestions? Thanks in advance :D

Hi,

Can someone please explain in detail how I can use one of the famous pretrained nets (e.g. AlexNet)?

That includes:

1) How can I download the pretrained net? (Is it already included in the windows library?) I didn’t find any suitable script in the caffe-windows folder.

2) How can I give an input to the net (like the RGB values of the pixels in an image)? I want to provide the input myself.

3) How can I extract features from “middle” layers of the net? (when I test the net after I give my input)

This information will be very helpful to me, thanks in advance!

Hi,

Can somebody explain why SIGHUP, sigaction, SA_RESTART and sigfillset are reported as not declared in signal_handler.cpp?

Thanks in advance.

Hi, I ran into this problem recently too: SIGHUP, sigaction, SA_RESTART, sigfillset not declared. Have you solved it? Thanks in advance

Maybe this helps: https://msdn.microsoft.com/en-us/library/61af7cx3.aspx. Just enclose the declaration in a block.
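For context: SIGHUP, sigaction, SA_RESTART and sigfillset are POSIX facilities that the Microsoft C runtime does not provide, which would explain why signal_handler.cpp fails to compile. One possible workaround, offered here as an assumption and not as the fix used by any particular Windows port, is to compile the sigaction code only when `_WIN32` is undefined and fall back to `std::signal` for SIGINT on Windows. All names below (`handle_signal`, `hook_up_handlers`, `got_sigint`, `got_sighup`) are hypothetical:

```cpp
#include <csignal>
#ifndef _WIN32
#include <signal.h>  // sigaction, sigfillset, SA_RESTART, SIGHUP (POSIX only)
#endif

// Flags written from the handler; volatile std::sig_atomic_t is the classic
// type that can be written safely from a signal handler.
static volatile std::sig_atomic_t got_sigint = 0;
static volatile std::sig_atomic_t got_sighup = 0;

static void handle_signal(int sig) {
  if (sig == SIGINT) {
    got_sigint = 1;
#ifdef _WIN32
    // The MSVC CRT resets the handler to SIG_DFL before calling it,
    // so re-register to keep catching Ctrl+C.
    std::signal(SIGINT, handle_signal);
#endif
  }
#ifndef _WIN32
  else if (sig == SIGHUP) {
    got_sighup = 1;
  }
#endif
}

// Hypothetical hook-up routine: sigaction on POSIX, std::signal on Windows,
// where neither sigaction nor SIGHUP exists.
void hook_up_handlers() {
#ifdef _WIN32
  std::signal(SIGINT, handle_signal);
#else
  struct sigaction sa = {};
  sa.sa_handler = &handle_signal;
  sa.sa_flags = SA_RESTART;  // restart system calls interrupted by the signal
  sigfillset(&sa.sa_mask);   // block other signals while the handler runs
  sigaction(SIGHUP, &sa, nullptr);
  sigaction(SIGINT, &sa, nullptr);
#endif
}
```

With this split, SIGHUP handling simply does not exist on Windows, so any action tied to SIGHUP would only work on Linux.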

Most Caffe Windows repositories seem to be out of date and unmaintained. So, thanks to Neil Z. Shao and this blog post, I converted the latest version of Caffe: http://github.com/woozzu/caffe

Hi,

Has anyone tried HDF5 file input on Windows? I got a lot of errors using Neil’s 3rd-party libraries. I tried to fix this by rebuilding the HDF5 libraries from a more recent release, but then I got a lot of unresolved externals.

Detailed messages are attached here:

Caffe error at runtime:

I0728 15:03:48.645072 8684 net.cpp:368] data -> label

I0728 15:03:48.647073 8684 net.cpp:120] Setting up data

I0728 15:03:48.647073 8684 hdf5_data_layer.cpp:80] Loading list of HDF5 filenames from: hdf5_classification/data/test.txt

I0728 15:03:48.648072 8684 hdf5_data_layer.cpp:94] Number of HDF5 files: 1

HDF5-DIAG: Error detected in HDF5 (1.8.14) thread 0:

#000: ..\..\src\H5Dio.c line 173 in H5Dread(): can’t read data

major: Dataset

minor: Read failed

#001: ..\..\src\H5Dio.c line 550 in H5D__read(): can’t read data

major: Dataset

minor: Read failed

#002: ..\..\src\H5Dchunk.c line 1872 in H5D__chunk_read(): unable to read raw data chunk

major: Low-level I/O

minor: Read failed

#003: ..\..\src\H5Dchunk.c line 2902 in H5D__chunk_lock(): data pipeline read failed

major: Data filters

minor: Filter operation failed

#004: ..\..\src\H5Z.c line 1357 in H5Z_pipeline(): required filter ‘deflate’ is not registered

major: Data filters

minor: Read failed

#005: ..\..\src\H5PL.c line 298 in H5PL_load(): search in paths failed

major: Plugin for dynamically loaded library

minor: Can’t get value

#006: ..\..\src\H5PL.c line 466 in H5PL__find(): can’t open directory

major: Plugin for dynamically loaded library

minor: Can’t open directory or file

F0728 15:03:48.665073 8684 io.cpp:273] Check failed: status >= 0 (-1 vs. 0) Failed to read float dataset data

*** Check failure stack trace: ***

Error linking with the new HDF5 library:

1>------ Build started: Project: caffe, Configuration: Release x64 ------

1>C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\V120\Microsoft.CppBuild.targets(364,5): warning MSB8004: Output Directory does not end with a trailing slash. This build instance will add the slash as it is required to allow proper evaluation of the Output Directory.

1> Creating library ../bin\caffe.lib and object ../bin\caffe.exp

1>libhdf5.lib(H5Z.obj) : error LNK2001: unresolved external symbol SZ_encoder_enabled

1>libhdf5.lib(H5Zdeflate.obj) : error LNK2001: unresolved external symbol inflate

1>libhdf5.lib(H5Zdeflate.obj) : error LNK2001: unresolved external symbol inflateEnd

1>libhdf5.lib(H5Zdeflate.obj) : error LNK2001: unresolved external symbol compress2

1>libhdf5.lib(H5Zdeflate.obj) : error LNK2001: unresolved external symbol inflateInit_

1>libhdf5.lib(H5Zszip.obj) : error LNK2001: unresolved external symbol SZ_BufftoBuffCompress

1>libhdf5.lib(H5Zszip.obj) : error LNK2001: unresolved external symbol SZ_BufftoBuffDecompress

1>../bin\caffe.exe : fatal error LNK1120: 7 unresolved externals